Quote of the Day

“He is the man who sits in the outer office of the White House hoping to hear the President sneeze”.

- H.L. Mencken, writing about the Vice President, 29 January 1956.

Musical alternative to the morning’s radio news

Anne-Sophie Mutter, Daniel Barenboim, Yo-Yo Ma:

Beethoven: Triple Concerto in C Major, Op. 56: II. Largo

5 minutes and 22 seconds of pure bliss.

Note: I’ve decided that the embedded links that I’ve been providing up to now create more problems for some readers than they’re worth. So henceforth each musical interlude will just have a simple URL link. Often, simplest is best.

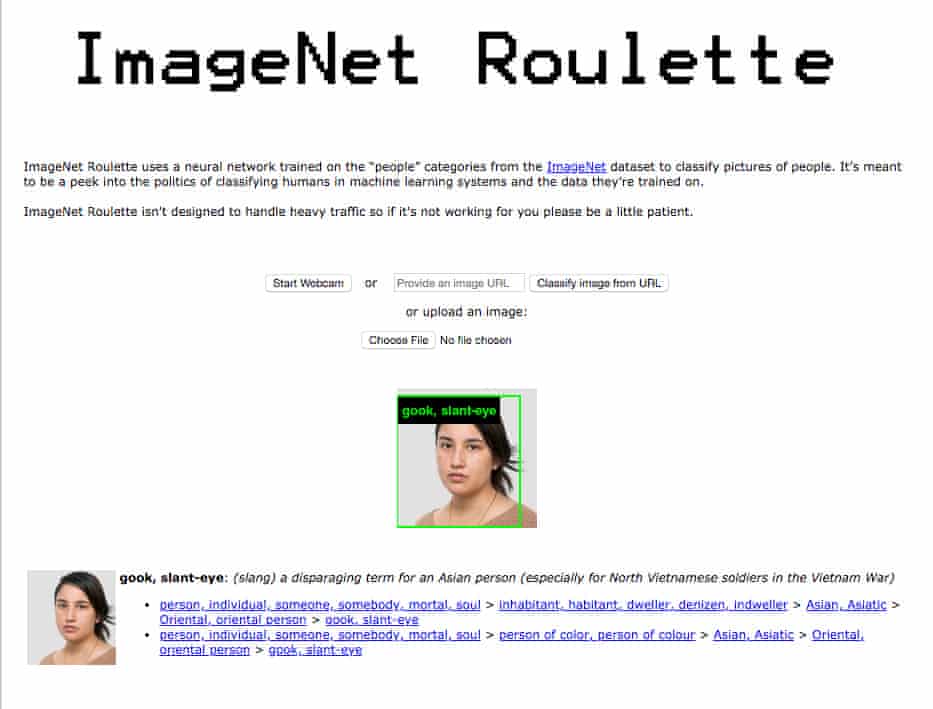

A European at Stanford

Terrific New Yorker profile of the Dutch politician (and former MEP) Marietje Schaake and what she found when she entered the belly of the Silicon Valley beast.

In conversation and lectures, Schaake often describes herself as an alien, as if she were an anthropologist from a distant world studying the local rites of Silicon Valley. Last fall, not long after she’d settled in, she noticed one particularly strange custom: at parties and campus lectures, she would be introduced to people and told their net worth. “It would be, like, ‘Oh, this is John. He’s worth x millions of dollars. He started this company,’ ” she said. “Money is presented as a qualification of success, which seems to be measured in dollars.” Sometimes people would meet her and launch directly into pitching her their companies. “I think people figure, if you’re connected with Stanford, you must have some interest in venture capital and startups. They don’t bother to find out who you are before starting the sales pitch.”

These experiences spoke to a pervasive blurring between the corporate and the academic, which she saw almost everywhere at Stanford. The university is deeply embedded in the corporate life of Silicon Valley and has been directly enriched by many of the companies that Schaake would like to see regulated more heavily and broken apart; H.A.I., according to one of its directors, receives roughly thirteen per cent of its pledged gifts from tech firms, and a majority of its funding from individuals and companies. The names of wealthy donors on buildings and institutes, the department chairs endowed by corporations, the enormous profits from high tuition prices—none of this happened at her alma mater, the University of Amsterdam, where tuition is highly subsidized and public funding supports the operating expenses of the university. (The University of Amsterdam, of course, is not internationally known as an incubator of startups and a hotbed of innovation.) Beyond Stanford, the contrasts seemed just as stark. Roughly sixty per cent of housing in Amsterdam is publicly subsidized. The main street running through Palo Alto, by contrast, is lined with dozens of old R.V.s, vans, and trailers, in which many semi-homeless service workers live. The public middle school in Menlo Park, where Schaake now resides, has students who are homeless, although the area’s average home value is almost $2.5 million.

When I first heard that she was going to Stanford, I feared for her sanity. Having read this, I think she’ll be ok. Her bullshit detector is still in good working order.

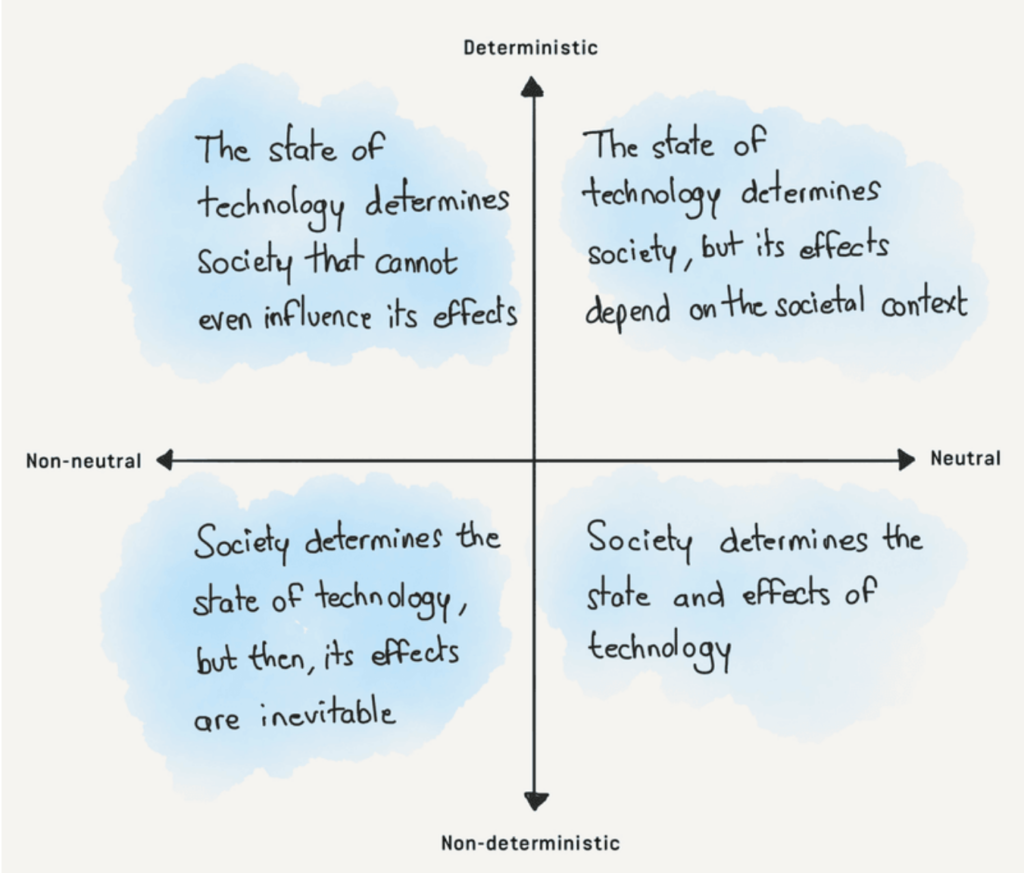

Scream if you want to go faster: Johnson in Cummingsland

This is my long read of the day. Terrific essay by Rachel Coldicutt:

The emergence of a patchwork of UK innovation initiatives over the last few months is notable. Rather than fiddling with increments of investment, there is a commitment to large-scale, world-leading innovation and enthusiasm for the potential of data.

But there is also a culture of opacity and bluster, a repeated lack of effectiveness, and a tendency to do secret deals with preferred suppliers. Taken together with the lack of a public strategy, this has led to a lot of speculation, a fair few conspiracy theories, and a great deal of concern about the social impact of collecting, keeping, and centralising data.

But it seems very possible that there is actually no big plan — conspiratorial or otherwise. In going through speeches and policy documents, I have found no vision for society — save the occasional murmur of “Levelling Up” — and plenty of evidence of a fixation with the mechanics of government.

This is a technocratic revolution, not a political one, driven by a desire to obliterate bureaucracy, centralise power, and increase improvisation.

And this obsession with process has led to a complete disregard for outcomes.

The thing about Cummings — and the data-analytics crowd generally — is that they know nothing of how society actually works, and subscribe to a crippled epistemology which leads them to think that the more data you have, the more perfect your knowledge of the world.

Actually, most of them don’t even realise they have an epistemology.

Furloughed Brits got paid not to work—but two-thirds of them worked anyway

From Quartz:

Economists at the universities of Oxford, Zurich, and Cambridge looked into the UK furlough program, which by mid-June 2020 was supporting one-third of the country’s workforce, accounting for more than 9 million jobs. Under the scheme, the UK government pays workers up to 80% of their salary for a limited period of time, allowing companies to retain them without paying them—though companies were allowed to top up the government money.

Until July 1st, the plan also specifically prohibited workers from working for their employers when on the scheme. But the researchers, who surveyed over 4,000 people in two waves in April and May 2020, discovered a striking fact: Only 37% of furloughed workers reported doing no work at all for their employers during that time.

In some sectors, the imperative to work definitely came from employers. In the sector termed “computer and mathematical,” 44% of those surveyed said they had been asked to work despite being furloughed.

But it also seems that many employees chose to work because they wanted to. Two-thirds of all workers said they had done some work despite being on furlough, even though only 20% were actually asked to. Perhaps unsurprisingly, those on higher salaries, those able to work from home, and those with the most flexible contracts were most likely to do some work.

One in five college students don’t plan to go back this fall

As the coronavirus pandemic pushes more and more universities to switch to remote learning — at least to start — 22% of college students across all four years are planning not to enroll this fall, according to a new College Reaction/Axios poll.

Among other things, the report claims that 20% of Harvard undergraduates have decided to defer for a year. Harvard (a hedge fund with a nice university attached) can ride out a drop in enrolment of that size, but many poorer institutions will struggle.

Summer books #8

Puligny-Montrachet: Journal of a Village in Burgundy by Simon Loftus, Daunt Books, 2019.

If you like France, or wine, or (like me) both, then you’ll enjoy this eccentric but utterly charming social history of the village (well, pair of villages) from which some of the country’s finest dry white wine comes. Loftus is a very good social historian, and his account of what are, in most respects, unglamorous villages is both affectionate and unsentimental. Some good friends of mine, when driving home to Holland from Provence, always used to have an overnight stop in Puligny, from which they would depart the following morning with a car boot full of the most wonderful wine. My fond hope is that, when the plague recedes a bit, we might one day do the same.

This blog is also available as a daily email. If you think this might suit you better, why not subscribe? One email a day, delivered to your inbox at 7am UK time. It’s free, and there’s a one-click unsubscribe if you decide that your inbox is full enough already!