Lovely project, based in Cambridge.

Category Archives: Open Source

Hmmm.. birthday greetings from M$soft

Video here. (Embed code doesn’t seem to work.)

Wheels within wheels

This is lovely: a PC emulator (running Linux) written in JavaScript and running in my Firefox browser on a Mac. Written by Fabrice Bellard.

Thanks to Jon Crowcroft for the link.

Android’s fragmentation problem

One of my boys has recently adopted my Android phone after his 6-year-old Motorola handset finally gave up the ghost, and it’s been interesting to observe his reactions. On the one hand, he’s charmed by finally having a handheld device that connects properly to the Net and the Web. But his experiences with Android apps mirror mine: there is not much quality control, quality varies greatly, and many apps won’t work with lots of handsets. In fact, he’s experiencing the problems that finally drove me to get an iPhone.

What he hasn’t experienced yet, though, is the maddening control-freakery of the mobile carriers in relation to updating the OS on the handset. First of all, they accept no responsibility for the OS; and secondly, even when they grudgingly offer some upgrade facility, it’s often flaky and sometimes requires serious geek skills to implement. A friend of my daughter’s has the same Android handset (a T-Mobile Pulse) and when I asked her what version of the OS it was running she said “I think it’s 2 point something”. Surprised (because she is not in the least geeky), I asked her how she’d done the upgrade from the version 1.5 that’s running on my handset. She replied that her brother, who is an engineering student and a real geek, does the upgrades for her. But then she added: “the only problem is that it crashes a bit after he’s done the upgrade”.

Dan Gillmor has an interesting piece in Salon.com in which he explores some of these issues.

The first problem, as I noted in a recent post, is that Google has given the mobile carriers nearly total control over the phones they sell — including the software. In the process, they’re taking Android — an open-source operating system when it gets to the carrier — and turning it into an operating system that removes user choice, by adding software that locks down the devices in ways that are even worse, in some respects, than the famous Apple control-freakery. At least Apple doesn’t load crapware — mostly unwanted, unneeded and un-removable software — onto the iPhone and iPad, as the carriers are doing with their Android devices. This has forced users to jailbreak their Android phones, a perversion of the very idea of openness.

We’ve seen the consequences of mixing manufacturer control-freakery with open source OSs already in the Netbook market, with every vendor offering its own infuriating version of Linux Lite. I’m tired of having to clear the disk of every Netbook I try in order to install Ubuntu. But at least the Ubuntu people take responsibility for their distribution, and they’re very helpful in relation to different brands of Netbook. Google should do the same for Android.

Why Louis Gray turned in his iPhone and went for Android

Long, thoughtful post by Louis Gray.

For me, more than the over-used phrase of "open", the promise of true multitasking, and the platform's integration with Google Apps, was one word – "Choice". Choice of handsets. Choice of carriers. Choice of manufacturers. Second behind the word choice has to be "Momentum". I can see that Android has momentum in terms of improved quality, in terms of the number of devices sold and users, and yes, applications, which are growing in quantity, soon to be followed by quality. I really do believe that if Android does not already have a market share lead over Apple yet in this discussion, they soon will. It is inevitable. The growth in the number of handsets, carriers and users will drive more developers to the platform, and the holdouts who are not there will eventually make the move. And yes, third is "Cloud" – the idea that I don't need to be tied to my desktop computer to manage data on the phone, but instead, the phone is built to tap into data stored on the Web. Fourth is "Capability". The Android platform, as the Droid commercials offer, simply does more. The power of the mobile hotspot cannot be understated, and the iPhone is a zero there…

Worth reading in full.

The economics of peer review

My Open University colleague Martin Weller has done some interesting calculations of the cost of the academic peer-review process.

Peer-review is one of the great unseen tasks performed by academics. Most of us do some, for no particular reward, but out of a sense of duty towards the overall quality of research. It is probably a community norm also, as you become enculturated in the community of your discipline, there are a number of tasks you perform to achieve, and to demonstrate, this, a number of which are allied to publishing: Writing conference papers, writing journal articles, reviewing.

So it's something we all do, isn't really recognised and is often performed on the edges of time. It's not entirely altruistic though – it is a good way of staying in touch with your subject (like a sort of reading club), it helps with networking (though we have better ways of doing this now don't we?) and we also hope people will review our own work when the time comes. But generally it is performed for the good of the community (the Peer Review Survey 2009 states that the reason 90% reviewers gave for conducting peer review was "because they believe they are playing an active role in the community")

It’s a labour that is unaccounted for. The Peer Review Survey doesn’t give a cost estimate (as far as I can see), but we can do some back of the envelope calculations. It says there are 1.3 million peer-reviewed journal articles published every year, and the average (modal) time for review is 4 hours. Most articles are at least double-reviewed, so that gives us:

Time spent on peer review = 1,300,000 x 2 x 4 = 10.4 million hours

This doesn’t take into account editor’s time in compiling reviews or chasing them up, we’ll just stick with the ‘donated’ time of academics for now. In terms of cost, we'd need an average salary, which is difficult globally. I’ll take the average academic salary in the UK, which is probably a touch on the high side. The Times Higher gives this as £42,000 per annum, before tax, which equates to £20.19 per hour. So the cost with these figures is:

20.19 x 10,400,000 = £209,976,000
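The arithmetic above is easy to re-run with different inputs. Here is a minimal Python sketch that uses only the figures quoted in the post. The one assumption is the working year of 52 weeks x 40 hours (2,080 hours), which is what the quoted £20.19 hourly rate implies; the function and constant names are illustrative, not Martin's.

```python
# Back-of-the-envelope cost of peer review, using the figures quoted above.
ARTICLES_PER_YEAR = 1_300_000   # peer-reviewed articles per year
REVIEWS_PER_ARTICLE = 2         # "at least double-reviewed"
HOURS_PER_REVIEW = 4            # modal review time
ANNUAL_SALARY_GBP = 42_000      # Times Higher figure, before tax

# Assumption: a 52-week, 40-hour working year (implied by the £20.19 rate).
WORKING_HOURS_PER_YEAR = 52 * 40


def total_review_hours(articles, reviews_per_article, hours_per_review):
    """Total hours of reviewing donated per year."""
    return articles * reviews_per_article * hours_per_review


def hourly_rate(annual_salary, working_hours):
    """Hourly pay, rounded to the nearest penny as in the post."""
    return round(annual_salary / working_hours, 2)


def review_cost(hours, rate):
    """Cost of the donated hours at the given hourly rate."""
    return hours * rate


hours = total_review_hours(ARTICLES_PER_YEAR, REVIEWS_PER_ARTICLE, HOURS_PER_REVIEW)
rate = hourly_rate(ANNUAL_SALARY_GBP, WORKING_HOURS_PER_YEAR)
cost = review_cost(hours, rate)

print(f"Hours donated: {hours:,}")
print(f"Hourly rate:   £{rate:.2f}")
print(f"Annual cost:   £{cost:,.0f}")
```

Swapping in a lower salary or a longer review time shows how sensitive the headline figure is to each guess.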

Martin points out the implication of this — that academics are donating over £200 million a year of their time to the peer review process. “This isn’t a large sum when set against things like the budget deficit”, he continues,

“but it’s not inconsiderable. And it’s fine if one views it as generating public good – this is what researchers need to do in order to conduct proper research. But an alternative view is that academics (and ultimately taxpayers) are subsidising the academic publishing to the tune of £200 million a year. That’s a lot of unpaid labour.

Now that efficiency and return on investment are the new drivers for research, the question should be asked whether this is the best way to ‘spend’ this money? I’d suggest that if we are continuing with peer review (and its efficacy is a separate argument), then the least we should expect is that the outputs of this tax-payer funded activity should be freely available to all.

And so, my small step in this was to reply to the requests for reviews stating that I have a policy of only reviewing for open access journals. I’m sure a lot of people do this as a matter of course, but it’s worth logging every blow in the revolution. If we all did it….”

Yep.

End of the road for H.264?

This clipping from GMSV’s coverage of the Google Developers’ conference is interesting.

The announcement with the biggest implications down the road was the unveiling of WebM, an open-source, royalty-free video codec based on VP8. It’s being positioned as the standard for video in HTML5 rather than the proprietary H.264 or the royalty-free but problematic Theora. Yep, you with the glazing eyes: Adoption of H.264 could mean fees imposed on content distributors and providers, though so far the license holders have waived collection. Those license holders include Microsoft and Apple — and Apple is the notable abstainer in the chorus of support for VP8. Could get interesting.

Yep. And the most interesting thing about it is that it’s open source.

Crowdsourcing, open source and sloppy terminology

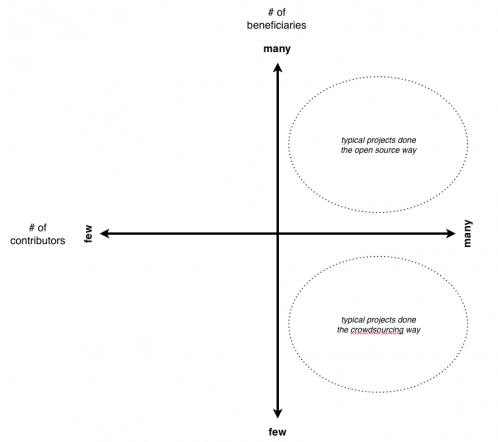

It’s funny how often terms like ‘open source’ and ‘crowdsourcing’ find their way into everyday discourse, where they are used casually to mean anything that involves lots of people. This diagram comes from a thoughtful post by Chris Grams. It begins:

It finally hit me the other day just why the open source way seems so much more elegantly designed (and less wasteful) to me than what I’ll call “the crowdsourcing way”.

1. Typical projects run the open source way have many contributors and many beneficiaries.

2. Typical projects run the crowdsourcing way have many contributors and few beneficiaries.

Worth reading in full. Thanks to Glyn Moody for spotting it.

Tim Bray: Now A No-Evil Zone

Tim Bray has jumped ship — from Oracle to Google. And he’s there to work on Android and compete with Apple.

The iPhone vision of the mobile Internet’s future omits controversy, sex, and freedom, but includes strict limits on who can know what and who can say what. It’s a sterile Disney-fied walled garden surrounded by sharp-toothed lawyers. The people who create the apps serve at the landlord’s pleasure and fear his anger.

I hate it.

I hate it even though the iPhone hardware and software are great, because freedom’s not just another word for anything, nor is it an optional ingredient.

The big thing about the Web isn’t the technology, it’s that it’s the first-ever platform without a vendor (credit for first pointing this out goes to Dave Winer). From that follows almost everything that matters, and it matters a lot now, to a huge number of people. It’s the only kind of platform I want to help build.

Apple apparently thinks you can have the benefits of the Internet while at the same time controlling what programs can be run and what parts of the stack can be accessed and what developers can say to each other.

I think they’re wrong and see this job as a chance to help prove it.

Hooray! Interesting times ahead. And he’s a photographer too.

Valuing Open Source software

From Slashdot:

“The Linux kernel would cost more than one billion EUR (about 1.4 billion USD) to develop in European Union. This is the estimate made by researchers from University of Oviedo (Spain), whereby the value annually added to this product was about 100 million EUR between 2005 and 2007 and 225 million EUR in 2008. Estimated 2008 result is comparable to 4% and 12% of Microsoft’s and Google’s R&D expenses on whole company products. Cost model ‘Intermediate COCOMO81’ is used according to parametric estimations by David Wheeler. An average annual base salary for a developer of 31,040 EUR was estimated from the EUROSTAT. Previously, similar works had been done by several authors estimating Red Hat, Debian, and Fedora distributions. The cost estimation is not of itself important, but it is an important means to an end: that commons-based innovation must receive a higher level of official recognition that would set it as an alternative to decision-makers. Ideally, legal and regulatory framework must allow companies participating on commons-based R&D to generate intangible assets for their contribution to successful projects. Otherwise, expenses must have an equitable tax treatment as a donation to social welfare.”

Thanks to Glyn Moody for spotting it.
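For anyone curious how a COCOMO-style valuation turns lines of code into money, here is a rough Python sketch of the Intermediate COCOMO 81 effort formula, effort = a x KLOC^b x EAF (in person-months), priced at the 31,040 EUR salary from the quote. The excerpt does not give the study's line count, project mode, cost-driver multipliers or overheads, so the kernel size and the EAF of 1.0 used below are purely hypothetical placeholders, and the output should not be expected to match the one-billion-EUR figure.

```python
# Intermediate COCOMO 81: effort (person-months) = a * KLOC**b * EAF.
# (a, b) pairs are the standard published values for each project mode.
COEFFICIENTS = {
    "organic": (3.2, 1.05),
    "semi-detached": (3.0, 1.12),
    "embedded": (2.8, 1.20),
}

ANNUAL_SALARY_EUR = 31_040  # developer base salary quoted from EUROSTAT


def effort_person_months(kloc, mode="organic", eaf=1.0):
    """Estimated effort for `kloc` thousand lines of code.

    `eaf` is the effort adjustment factor (product of the cost-driver
    multipliers); 1.0 means every driver is rated nominal.
    """
    a, b = COEFFICIENTS[mode]
    return a * kloc ** b * eaf


def development_cost_eur(kloc, mode="organic", eaf=1.0,
                         annual_salary=ANNUAL_SALARY_EUR):
    """Effort converted to salary cost (no overheads included)."""
    person_years = effort_person_months(kloc, mode, eaf) / 12
    return person_years * annual_salary


# Hypothetical input: a code base of 10,000 KLOC. NOT the study's figure.
HYPOTHETICAL_KLOC = 10_000

for mode in COEFFICIENTS:
    cost = development_cost_eur(HYPOTHETICAL_KLOC, mode)
    print(f"{mode:>13}: {cost:,.0f} EUR")
```

Because the exponent b is greater than 1, doubling the code size more than doubles the estimated cost, which is why the choice of mode and line count dominates the result.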