Mark Zuckerberg’s ‘defence’ of Facebook’s role in the election of Trump provides a vivid demonstration of how someone can have a very high IQ and yet be completely clueless, as Zeynep Tufekci points out in a splendid NYT op-ed piece:

Mr. Zuckerberg’s preposterous defense of Facebook’s failure in the 2016 presidential campaign is a reminder of a structural asymmetry in American politics. It’s true that mainstream news outlets employ many liberals, and that this creates some systemic distortions in coverage (effects of trade policies on lower-income workers and the plight of rural America tend to be underreported, for example). But bias in the digital sphere is structurally different from that in mass media, and a lot more complicated than what programmers believe.

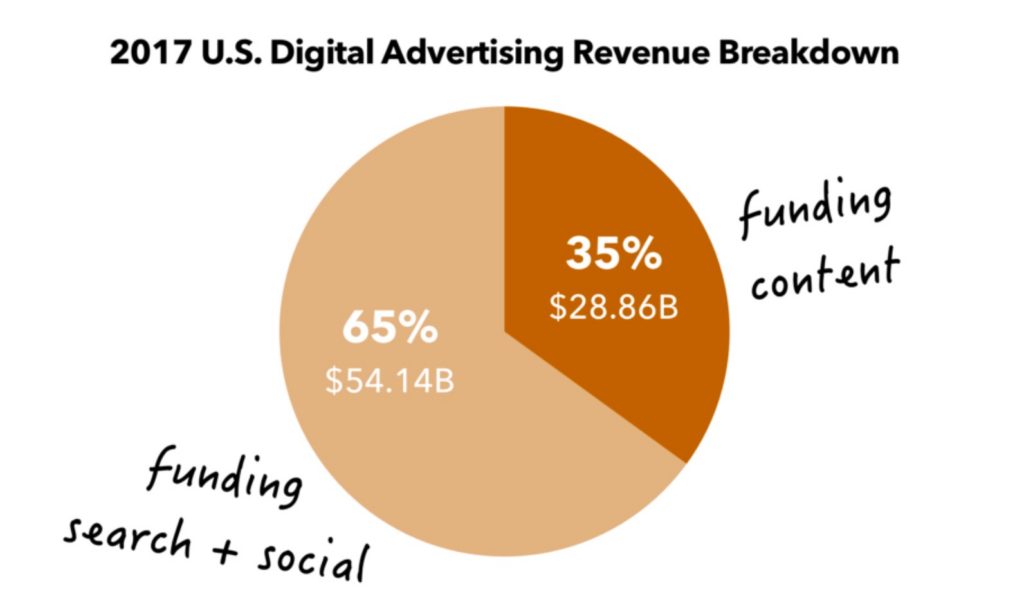

In a largely automated platform like Facebook, what matters most is not the political beliefs of the employees but the structures, algorithms and incentives they set up, as well as what oversight, if any, they employ to guard against deception, misinformation and illegitimate meddling. And the unfortunate truth is that by design, business model and algorithm, Facebook has made it easy for it to be weaponized to spread misinformation and fraudulent content. Sadly, this business model is also lucrative, especially during elections. Sheryl Sandberg, Facebook’s chief operating officer, called the 2016 election “a big deal in terms of ad spend” for the company, and it was. No wonder there has been increasing scrutiny of the platform.