- Data protection experts want watchdog to investigate Conservative and Labour parties. They're probably contravening the GDPR by buying Experian data and then using it to target voters.

- Why the cost of education and healthcare continues to rise. Essentially because of Baumol's cost disease. Useful explainer.

- The ethics of algorithms: Mapping the debate. Really helpful framework for thinking about this.

- There are bots: look around. Terrific essay by Renee DiResta on the parallels between automated trading in financial markets and in the so-called "marketplace of ideas".

Quote of the Day

“When people have money, they convert it into emissions. That’s what wealth is.”

- Simon Kuper, writing in today’s Financial Times

Johnson’s plans for Singapore-on-Thames

Terrific FT scoop today:

The British government is planning to diverge from the EU on regulation and workers’ rights after Brexit, despite its pledge to maintain a “level playing field” in prime minister Boris Johnson’s deal, according to an official paper shared by ministers this week.

The government paper drafted by Dexeu, the Brexit department, with input from Downing Street stated that the UK was open to significant divergence, even though Brussels is insisting on comparable regulatory provisions.

The issue will come to a head when the UK begins the next phase of talks with the EU to forge a new trade deal. However, the UK in effect still faces the prospect of a no-deal Brexit next week unless EU states agree a new extension date for when the UK will leave the bloc. France was on Friday pushing for a shorter extension date than the one Mr Johnson has requested.

In a passage that could alarm Labour MPs who have backed the Brexit bill, the leaked government document also said the drafting of workers’ rights and environmental protection commitments “leaves room for interpretation”.

The paper, titled “Update to EPSG on level playing field negotiations”, appears to contradict comments made by Mr Johnson on Wednesday when he said the UK was committed to “the highest possible standards” for workers’ rights and environmental standards.

Chlorinated chickens and other delights beckon.

Autumn books

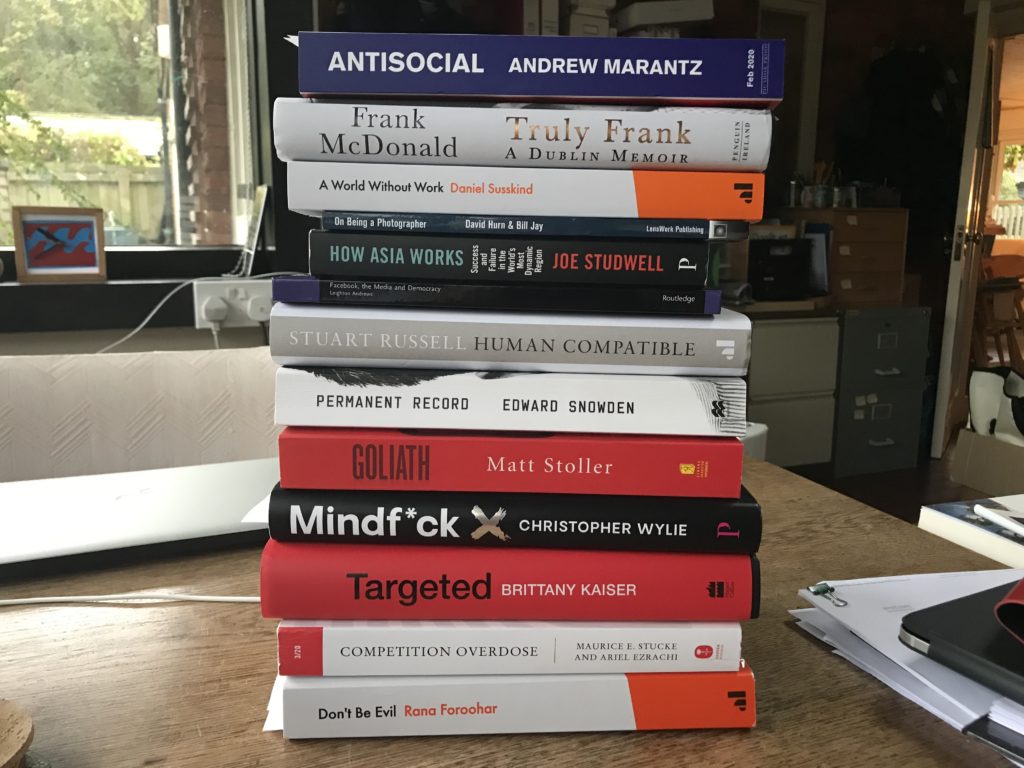

In one of my periodic attempts to impose order on my study I rounded up all the books I'm

- reading

- reviewing

- required to read for work

- hoping to read for pleasure

And, having done so, wondered about my sanity.

From the top down…

- Antisocial: Online Extremists, Techno-Utopians, and the Hijacking of the American Conversation by Andrew Marantz. Just arrived yesterday. Described by Jaron Lanier as “a close-up portrait of the new species of online shock artists who have taken over the American conversation”. I was going to say that I’m looking forward to reading it, but perhaps it’d be more honest to say that I’m gritting my teeth because it’s something I have to read for work.

- Truly Frank: a Dublin Memoir by Frank McDonald. This one is purely for pleasure. Frank was one of the great journalists on the Irish Times, is an old friend and was a Wolfson Press Fellow back in the day.

- A World without Work: Technology, Automation and How We Should Respond by Daniel Susskind. This one is for work but won’t be out until January. I’ll probably review it.

- On Being a Photographer: A Practical Guide by David Hurn and Bill Jay (LensWork Publishing, 2009). This is a book I should have known about but didn't until a fellow photographer told me about it a few weeks ago.

- How Asia Works: Success and Failure in the World's Most Dynamic Region by Joe Studwell, recommended to me as a must-read by Nicolas Colin, whom I've always found to be a terrific source of ideas. This is to fill one of the numerous gaps in my knowledge of the world.

- Facebook, the Media and Democracy: Big Tech, Small State? by Leighton Andrews. An interesting monograph on a topic I often write about.

- Human Compatible: AI and the Problem of Control by Stuart Russell. One of the best books about AI that I've read. My review of it for the Literary Review is here.

- Permanent Record by Edward Snowden. Terrific memoir, which I reviewed for the Observer.

- Goliath: The 100-Year War Between Monopoly Power and Democracy by Matt Stoller. Just arrived. I'm looking forward to reviewing it.

- Mindfu-k: Inside Cambridge Analytica's Plot to Break the World by Christopher Wylie. Terrific account, by a geek who was an insider, of how data analytics influenced Brexit and the 2016 presidential election. I've reviewed it alongside Brittany Kaiser's book Targeted: My Inside Story of Cambridge Analytica and How Trump, Brexit and Facebook Broke Democracy. The review will be out on Monday 28 October.

- Competition Overdose: How Free-market Mythology Transformed Us from Citizen Kings to Market Servants by Maurice Stucke and Ariel Ezrachi. Maurice and Ariel wrote a terrific book, *Virtual Competition: The Promise and Perils of the Algorithm-Driven Economy*, a few years back. When they told me they were contemplating a critical book on competition, I urged them on. Now they've done it, much to my admiration and delight. This is an advance proof, which I'm currently enjoying. Publication date is April 2020.

- Don’t Be Evil: The Case against Big Tech by Rana Foroohar. I’m reviewing it for the Observer.

Linkblog

- Dismembering Big Tech: breaking up may be hard to do

- J.S. Bach the rebel. Yeah, but most great artists were regarded as dodgy by their contemporaries. We now think of James Joyce as a great modernist writer. But in the Dublin of the 1920s he was held to be a bloody pornographer.

- Sue Halpern on the problem of political advertising on social media

- Facebook, free speech and power

Zuckerberg’s ideology

Facebook's announcement that it will include Breitbart in its select list of 'curated' news sources speaks volumes. Charlie Warzel has an intelligent take on it in the New York Times:

Because Mr. Zuckerberg is one of the most powerful people in politics right now — and because the stakes feel so high — there’s a desire to assign him a political label. That’s understandable but largely beside the point. Mark Zuckerberg may very well have political beliefs. And his every action does have political consequences. But he is not a Republican or a Democrat in how he wields his power. Mr. Zuckerberg’s only real political affiliation is that he’s the chief executive of Facebook. His only consistent ideology is that connectivity is a universal good. And his only consistent goal is advancing that ideology, at nearly any cost.

Yep. The only thing he really cares about is growth in the number of users of Facebook, and the engagement they have with the platform. And the collateral damage of that is someone else’s problem. This is sociopathy on steroids.

Linkblog

- Why driverless cars will mostly be shared, not owned.

- A conversation between Henry Farrell and Tyler Cowen. Henry is one of the most interesting people I read, and Tyler Cowen is an omnivorous thinker and writer.

- US nuclear weapons command and control systems no longer run on 8-inch floppy disks. Phew! Rest easy, Earthlings.

- History of AI Research: Essential Papers and Developments. Useful resource.

Ratmobiles

Now you really couldn’t make this up:

Researchers at the University of Richmond in the US taught a group of 17 rats how to drive little plastic cars, in exchange for bits of cereal.

Study lead Dr Kelly Lambert said the rats felt more relaxed during the task, a finding that could help with the development of non-pharmaceutical treatments for mental illness.

The rats were not required to take a driving test at the end of the study.

Linkblog

- BBC News site is now available on Tor

- How Facebook bought a (public) police force

- Social media has NOT destroyed a generation. Or, at any rate, there's no good empirical evidence that it has.

- Google boss on his company achieving 'quantum supremacy'

- But IBM begs to disagree. Silly argument IMHO.

Linkblog

- British public opinion(s) on autonomous vehicles

- The Odyssey in Limerick form. Lovely.

- Trailer for Steve Bannon's next film. For a fuller explanation (and the Huawei connection) see here.

- Theranos could have been stopped. Make that "should have been".