Category Archives: Technology

After the perfect picture, what?

Photography (in the technical rather than aesthetic sense) was once all about the laws of physics: wavelengths of different kinds of light, quality of lenses, refractive indices, coatings, scattering, colour rendition, depth of field, etc. And initially, when mobile phones started to have cameras, those laws bore down heavily on them: they had plastic lenses and tiny sensors with poor resolution and light-gathering properties. So the pictures they produced might be useful as mementoes, but were of no practical use to anyone interested in image quality. And given the constraints of size and cost imposed by the economics of handset manufacture and marketing, there seemed to be nothing much that anyone could do about that.

But this view applied only to hardware. The thing we overlooked is that smartphones were rather powerful handheld computers, and it was possible to write software that could augment — or compensate for — the physical limitations of the cameras.

I vividly remember the first time this occurred to me. It was a glorious late afternoon years ago in Provence and we were taking a friend on a drive round the spectacular Gorges du Verdon. About half-way round we stopped for a drink and stood contemplating the amazing views in the blazing sunlight. I reached for my (high-end) digital camera and fruitlessly struggled (by bracketing exposures) to take some photographs that could straddle the impossibly wide dynamic range of the lighting in the scene.

Then, almost as an afterthought, I took out my iPhone, realised that I had downloaded an HDR app, and so used that. The results were flawed in terms of colour balance, but it was clear that the software had been able to manage the dynamic range that had eluded my conventional camera. It was my introduction to what has become known as computational photography, a technology that has come on in leaps and bounds ever since that evening in Provence. Computational photography, as Benedict Evans puts it in a perceptive essay, “Cameras that Understand”, means that

“as well as trying to make a better lens and sensor, which are subject to the rules of physics and the size of the phone, we use software (now, mostly, machine learning or ‘AI’) to try to get a better picture out of the raw data coming from the hardware. Hence, Apple launched ‘portrait mode’ on a phone with a dual-lens system but uses software to assemble that data into a single refocused image, and it now offers a version of this on a single-lens phone (as did Google when it copied this feature). In the same way, Google’s new Pixel phone has a ‘night sight’ capability that is all about software, not radically different hardware. The technical quality of the picture you see gets better because of new software as much as because of new hardware.”

Most of how this is done is already, or soon will be, invisible to the user. Just as HDR used to involve launching a separate app, it’s now baked into many smartphone cameras, which do it automatically. Evans assumes that much the same will happen with ‘portrait mode’ and ‘night sight’: all that stuff will be baked into later releases of the cameras.
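The trick that the HDR app pulled off in Provence can be sketched in a few lines. What follows is a simplified form of exposure fusion, assuming a stack of bracketed greyscale frames that are already aligned and scaled to the range 0 to 1; it is not a description of how Apple, Google or that particular app actually do it, and the function name `fuse_exposures` and its `sigma` parameter are illustrative choices. Each pixel is weighted by how close it sits to mid-grey, so blown highlights and crushed shadows count for little in the blend.

```python
import numpy as np


def fuse_exposures(frames, sigma=0.2):
    """Blend aligned, bracketed greyscale exposures into one image.

    Illustrative sketch only: `frames` is assumed to be a list of
    same-shaped arrays with values in [0, 1]. Each pixel is weighted by
    its "well-exposedness" (closeness to mid-grey, 0.5), so blown-out
    highlights and crushed shadows contribute little to the result.
    """
    stack = np.stack([np.asarray(f, dtype=float) for f in frames])
    # Gaussian weight centred on 0.5; for inputs in [0, 1] it is never zero,
    # so the normalisation below cannot divide by zero.
    weights = np.exp(-((stack - 0.5) ** 2) / (2 * sigma ** 2))
    weights /= weights.sum(axis=0, keepdims=True)
    # Per-pixel weighted average across the exposure stack.
    return (weights * stack).sum(axis=0)


# A two-pixel "scene": deep shadow on the left, bright sky on the right.
short_exposure = np.array([0.02, 0.50])  # sky well exposed, shadow crushed
long_exposure = np.array([0.50, 1.00])   # shadow well exposed, sky blown out
print(fuse_exposures([short_exposure, long_exposure]))
```

In the toy example both pixels land close to mid-grey, taking detail from whichever frame exposed them well. Real pipelines go much further (aligning hand-held frames, blending at several scales and, as Evans notes, increasingly using machine learning), but the core idea of merging several imperfect exposures is the same.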

“This will probably”, writes Evans,

“also go several levels further in, as the camera gets better at working out what you’re actually taking a picture of. When you take a photo on a ski slope it will come out perfectly exposed and colour-balanced because the camera knows this is snow and adjusts correctly. Today, portrait mode is doing face detection as well as depth mapping to work out what to focus on; in the future, it will know which of the faces in the frame is your child and set the focus on them.”

So we’re heading for a point at which one will have to work really hard to take a (technically) imperfect photo. Which leads one to ask: what’s next?

Evans thinks that a clue lies in the fact that people increasingly use their smartphone cameras as visual notebooks — taking pictures of recipes, conference schedules, train timetables, books and stuff we’d like to buy. Machine learning, he surmises, can do a lot with those kinds of images.

“If there’s a date in this picture, what might that mean? Does this look like a recipe? Is there a book in this photo and can we match it to an Amazon listing? Can we match the handbag to Net a Porter? And so you can imagine a suggestion from your phone: ‘do you want to add the date in this photo to your diary?’ in much the same way that today email programs extract flights or meetings or contact details from emails.”

Apparently Google Lens is already doing something like this on Android phones.
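The diary suggestion Evans imagines can be sketched as a simple text-processing step, assuming some earlier OCR stage has already turned the photo into a string. The sketch is hypothetical throughout: `find_dates`, `suggest_diary_entries` and the regular expression are illustrative, recognise only one "day month year" style of date, and bear no relation to how Google Lens actually works.

```python
import re
from datetime import date

# Hypothetical sketch: assumes OCR has already extracted `text` from the photo.
MONTHS = {
    name: number
    for number, name in enumerate(
        ["jan", "feb", "mar", "apr", "may", "jun",
         "jul", "aug", "sep", "oct", "nov", "dec"],
        start=1,
    )
}

# Matches dates written like "14 March 2019" or "3 Sept. 2019".
DATE_PATTERN = re.compile(
    r"\b(\d{1,2})\s+(jan|feb|mar|apr|may|jun|jul|aug|sep|oct|nov|dec)[a-z]*\.?\s+(\d{4})\b",
    re.IGNORECASE,
)


def find_dates(text):
    """Return every valid 'day month year' date found in `text`."""
    found = []
    for day, month, year in DATE_PATTERN.findall(text):
        try:
            found.append(date(int(year), MONTHS[month.lower()], int(day)))
        except ValueError:
            continue  # skip impossible dates such as "31 Feb 2019"
    return found


def suggest_diary_entries(text):
    """Turn each detected date into a prompt of the kind Evans describes."""
    return [
        f"Do you want to add {d:%d %B %Y} to your diary?" for d in find_dates(text)
    ]


print(suggest_diary_entries("Keynote: 14 March 2019, Hall B. Dinner 31 Feb 2019."))
```

A production system would presumably lean on machine learning rather than a hand-written pattern, since dates on timetables and posters come in endless formats; the point here is only the shape of the pipeline: image to text, text to structured data, structured data to a suggestion.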

The inescapable infrastructure of the networked world

This morning’s Observer column:

“Quitting smoking is easy,” said Mark Twain. “I’ve done it hundreds of times.” Much the same goes for smartphones. As increasing numbers of people begin to realise that they have a smartphone habit they begin to wonder if they should do something about the addiction. A few (a very few, in my experience) make the attempt, switching their phones off after work, say, and not rebooting them until the following morning. But almost invariably the dash for freedom fails and the chastened fugitive returns to the connected world.

The technophobic tendency to attribute this failure to lack of moral fibre should be resisted. It’s not easy to cut yourself off from a system that links you to friends, family and employer, all of whom expect you to be contactable and sometimes get upset when you’re not. There are powerful network effects in play here against which the individual addict is helpless. And while “just say no” may be a viable strategy in relation to some services (for example, Facebook), it is now a futile one in relation to the networked world generally. We’re long past the point of no return in our connected lives.

Most people don’t realise this. They imagine that if they decide to stop using Gmail or Microsoft Outlook or never buy another book from Amazon then they have liberated themselves from the tentacles of these giants. If that is indeed what they believe, then Kashmir Hill has news for them…

What makes a ‘tech’ company?

The Blackrock Blog points out that something strange is going on in the investment world.

MSCI and S&P are updating their Global Industry Classification Standards (GICS), a framework developed in 1999, to reflect major changes to the global economy and capital markets, particularly in technology.

Take Google, a company long synonymous with “tech” and internet software. Google parent Alphabet derives the bulk of its revenue from advertising, but also makes money from apps and hardware, and operates side ventures including Waymo, a unit that makes self-driving cars. Decisions about what makes a “tech” giant are not as simple as they once were.

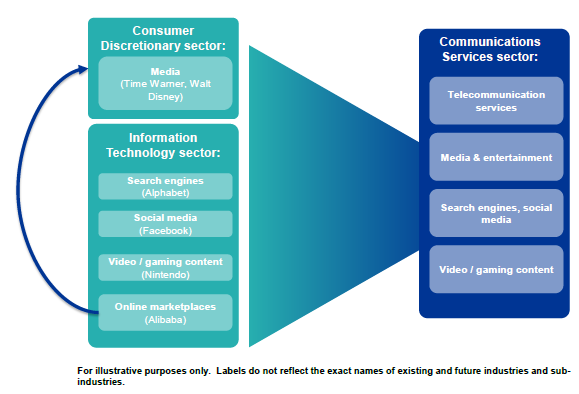

The sector classification overhaul, set in motion last year, will begin in September and affect three of the 11 sector classifications that divide the global stock market. A newly created Communications Services sector will replace a grouping that is currently called Telecommunications Services. The new group will be populated by legacy Telecom stocks, as well as certain stocks from the Information Technology and Consumer Discretionary categories.

What does this mean?

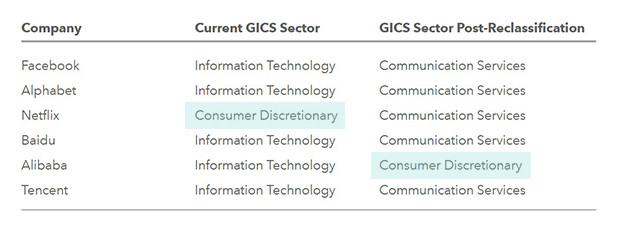

Facebook and Alphabet will move from Information Technology to Communications Services in GICS-tracking indexes. Meanwhile, Netflix will move from Consumer Discretionary to Communications Services. None of what the media has dubbed the FANG stocks (Facebook, Amazon.com, Netflix and Google parent Alphabet) will be classified as Information Technology after the GICS changes, perhaps a surprise to those who think of internet innovation as “tech.” The same applies to China’s BAT stocks (Baidu, Alibaba Group and Tencent). All of these were Information Technology stocks before the changes; none will be after.

Or, in a tabular view:

This change is probably only significant for index funds, but still, it must rather dent the self-image of the ‘tech’ boys to be categorised as merely “communications services”!

What’s in a name?

On my way to Brussels to chair a discussion on Shoshana Zuboff’s The Age of Surveillance Capitalism, I fell to reading Leo Marx’s celebrated essay, “Technology: The Emergence of a Hazardous Concept”, in which he ponders when, and why, the term ‘technology’ emerged. The term, in its modern sense of “the mechanical arts generally”, did not enter public discourse until around 1900, “when a few influential writers, notably Thorstein Veblen and Charles Beard, responding to German usage in the social sciences, accorded technology a pivotal role in shaping modern industrial society.”

Marx thinks that, to a cultural historian, some new terms, when they emerge, serve “as markers, or chronological signposts, of subtle, virtually unremarked, yet ultimately far-reaching changes in culture and society.”

His assumption, he writes,

“is that those changes, whatever they were, created a semantic—indeed, a conceptual—void, which is to say, an awareness of certain novel developments in society and culture for which no adequate name had yet become available. It was this void, presumably, that the word technology, in its new and extended meaning, eventually would fill.”

Which brought me back to musing about Zuboff’s new book and why it (and the two or three major essays of hers that preceded it) came as a flash of illumination. Especially the title. What ‘void’ (to use Marx’s idea) does it fill?

On reflection I think the answer lies in the conceptual vacuity of the terms we have traditionally used to describe the phenomenon of digital technology, in particular the trope of “the Fourth Industrial Revolution” beloved of the Davos crowd, or “the digital era” (passim). For one thing, these terms are drenched in technological determinism, implying as they do that it’s the technology and its innate affordances that are driving contemporary history. In that sense these clichés are the spiritual heirs of “the age of Machinery”, Thomas Carlyle’s coinage to describe the industrial revolution of his day.

That’s why ‘Surveillance Capitalism’ represents a conceptual breakthrough. It does not assume that our condition is inexorably determined by the innate affordances of digital technology; instead it traces that condition to the particular ways in which capitalism has morphed in order to exploit the technology for its own purposes.

So what’s the Killer App for 5G?

If Ben Evans doesn’t know (and he doesn’t, really), then nobody knows.

In 2000 or so, when I was a baby telecoms analyst, it seemed as though every single telecoms investor was asking ‘what’s the killer app for 3G?’ People said ‘video calling’ a lot. But 3G video calls never happened, and it turned out that the killer app for having the internet in your pocket was, well, having the internet in your pocket. Over time, video turned out to be one part of that, but not as a telco service billed by the second. Equally, the killer app for 5G is probably, well, ‘faster 4G’. Over time, that will mean new Snapchats and new YouTubes – new ways to fill the pipe that wouldn’t work today, and new entrepreneurs. It probably isn’t a revolution – or rather, it means that the revolution that’s been going on since 1995 or so keeps going for another decade or more, until we get to 6G.

Shoshana Zuboff’s new book

Today’s Observer carries a five-page feature about Shoshana Zuboff’s The Age of Surveillance Capitalism consisting of an intro by me followed by Q&A between me and the author.

LATER Nick Carr has a perceptive review of the book in the LA Review of Books. John Thornhill also had a good long review in last Saturday’s Financial Times, sadly behind a paywall.

Peak Apple? No: just peak smartphone

This morning’s Observer column:

On 2 January, in a letter to investors, Tim Cook revealed that he expected revenues for the final quarter of 2018 to be lower than originally forecast.

Given that most of Apple’s revenues come from its iPhone, this sent the tech commentariat into overdrive – to the point where one level-headed observer had to point out that the sky hadn’t fallen: all that had happened was that Apple shares were down a bit. And all this despite the fact that the other bits of the company’s businesses (especially the watch, AirPods, services and its retail arm) were continuing to do nicely. Calmer analyses showed that the expected fall in revenues could be accounted for by two factors: the slowdown in the Chinese economy (together with some significant innovations by the Chinese internet giant WeChat); and the fact that consumers seem to be hanging on to their iPhones for longer, thereby slowing the steep upgrade path that had propelled Apple to its trillion-dollar valuation.

What was most striking, though, was that the slowdown in iPhone sales should have taken journalists and analysts by surprise…

Media credulity and AI hype

This morning’s Observer column:

Artificial intelligence (AI) is a term that is now widely used (and abused), loosely defined and mostly misunderstood. Much the same might be said of, say, quantum physics. But there is one important difference, for whereas quantum phenomena are not likely to have much of a direct impact on the lives of most people, one particular manifestation of AI – machine-learning – is already having a measurable impact on most of us.

The tech giants that own and control the technology have plans to exponentially increase that impact and to that end have crafted a distinctive narrative. Crudely summarised, it goes like this: “While there may be odd glitches and the occasional regrettable downside on the way to a glorious future, on balance AI will be good for humanity. Oh – and by the way – its progress is unstoppable, so don’t worry your silly little heads fretting about it because we take ethics very seriously.”

Critical analysis of this narrative suggests that the formula for creating it involves mixing one part fact with three parts self-serving corporate cant and one part tech-fantasy emitted by geeks who regularly inhale their own exhaust…

The real significance of the Apple slide

Apart from the faltering Chinese economy and the collateral damage from Trump’s ‘trade war’, what the slide signals is that the smartphone boom triggered by Apple with the iPhone is ending: we’re reaching a plateau, and apparently there’s no New New Thing in sight. At any rate, that’s Kara Swisher’s take on it:

The last big innovation explosion — the proliferation of the smartphone — is clearly ending. There is no question that Apple was the center of that, with its app-centric, photo-forward and feature-laden phone that gave everyone the first platform for what was to create so many products and so much wealth. It was the debut of the iPhone in 2007 that spurred what some in tech call a “Cambrian explosion,” a reference to the era when the first complex animals appeared. There would be no Uber and Lyft without the iPhone (and later the Android version), no Tinder, no Spotify.

Now all of tech is seeking the next major platform and area of growth. Will it be virtual and augmented reality, or perhaps self-driving cars? Artificial intelligence, robotics, cryptocurrency or digital health? We are stumbling in the dark.

Yep. Situation normal, in other words.