In a sentence: it lumps three very different things (crime, espionage and warfare) under a single heading. And, as I tried to point out in yesterday’s Observer column, instead of making cyberspace more secure, many of the activities classified as ‘cyber security’ make it less so.

Bruce Schneier has a thoughtful essay on the subject.

Last week we learned about a striking piece of malware called Regin that has been infecting computer networks worldwide since 2008. It’s more sophisticated than any known criminal malware, and everyone believes a government is behind it. No country has taken credit for Regin, but there’s substantial evidence that it was built and operated by the United States.

This isn’t the first government malware discovered. GhostNet is believed to be Chinese. Red October and Turla are believed to be Russian. The Mask is probably Spanish. Stuxnet and Flame are probably from the U.S. All these were discovered in the past five years, and named by researchers who inferred their creators from clues such as who the malware targeted.

I dislike the “cyberwar” metaphor for espionage and hacking, but there is a war of sorts going on in cyberspace. Countries are using these weapons against each other. This affects all of us not just because we might be citizens of one of these countries, but because we are all potentially collateral damage. Most of the varieties of malware listed above have been used against nongovernment targets, such as national infrastructure, corporations, and NGOs. Sometimes these attacks are accidental, but often they are deliberate.

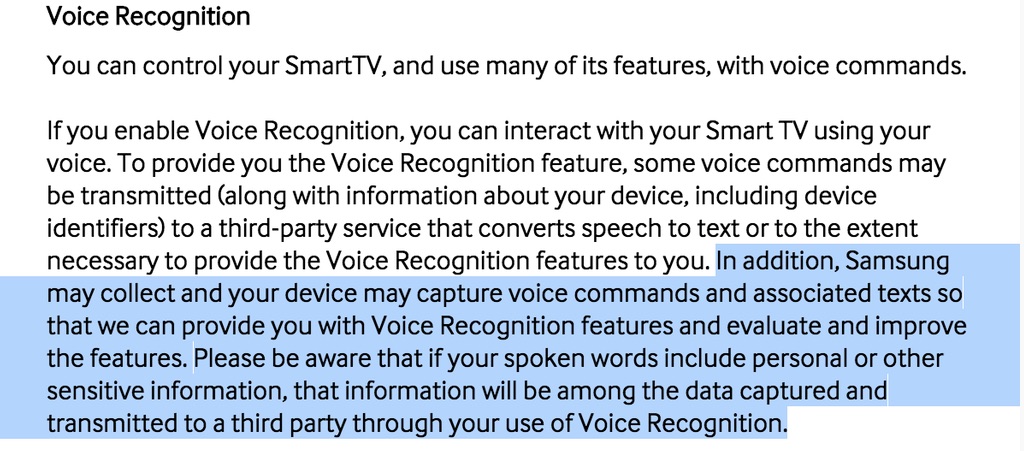

For their defense, civilian networks must rely on commercial security products and services. We largely rely on antivirus products from companies such as Symantec, Kaspersky, and F-Secure. These products continuously scan our computers, looking for malware, deleting it, and alerting us as they find it. We expect these companies to act in our interests, and never deliberately fail to protect us from a known threat.

This is why the recent disclosure of Regin is so disquieting. The first public announcement of Regin was from Symantec, on November 23. The company said that its researchers had been studying it for about a year, and announced its existence because they knew of another source that was going to announce it. That source was a news site, the Intercept, which described Regin and its U.S. connections the following day. Both Kaspersky and F-Secure soon published their own findings. Both stated that they had been tracking Regin for years. All three of the antivirus companies were able to find samples of it in their files since 2008 or 2009.

Yep. Remember that the ostensible mission of these companies is to make cyberspace more secure. By keeping quiet about the Regin threat, they did exactly the opposite. So, as Schneier concludes,

Right now, antivirus companies are probably sitting on incomplete stories about a dozen more varieties of government-grade malware. But they shouldn’t. We want, and need, our antivirus companies to tell us everything they can about these threats as soon as they know them, and not wait until the release of a political story makes it impossible for them to remain silent.