Spotted in the college gardens this morning.

How to handle 15 billion page views a month

Ye Gods! Just looked at the stats for Tumblr.

500 million page views a day

15B+ page views a month

~20 engineers

Peak rate of ~40k requests per second

1+ TB/day into Hadoop cluster

Many TB/day into MySQL/HBase/Redis/Memcache

Growing at 30% a month

~1000 hardware nodes in production

Hundreds of millions of page views per month per engineer

Posts are about 50GB a day. Follower list updates are about 2.7TB a day.

Dashboard runs at a million writes a second, 50K reads a second, and it is growing.

And all this with about twenty engineers!
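For anyone who wants to check the arithmetic, here is a rough back-of-envelope sketch using only the figures quoted above. The 30-day month is my assumption, and the per-second figure is a flat average over the day rather than anything Tumblr reported.

```python
# Back-of-envelope check of the Tumblr figures quoted in the post.
DAILY_PAGE_VIEWS = 500_000_000   # "500 million page views a day"
ENGINEERS = 20                   # "~20 engineers"
DAYS_PER_MONTH = 30              # assumption: a 30-day month
SECONDS_PER_DAY = 24 * 60 * 60

# Monthly total implied by the daily figure.
monthly_page_views = DAILY_PAGE_VIEWS * DAYS_PER_MONTH

# Flat average rate; real traffic is bursty, so peaks will be much higher.
average_views_per_second = DAILY_PAGE_VIEWS / SECONDS_PER_DAY

# Share of the monthly total per engineer.
monthly_views_per_engineer = monthly_page_views / ENGINEERS

print(f"Monthly page views:        {monthly_page_views:,}")
print(f"Average views per second:  {average_views_per_second:,.0f}")
print(f"Monthly views per engineer: {monthly_views_per_engineer:,.0f}")
```

On these numbers the daily figure does multiply out to the 15 billion a month in the headline, which comes to roughly 750 million page views per engineer per month.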

Web design and page obesity

My Observer column last Sunday (headlined “Graphics Designers are Ruining the Web”) caused a modest but predictable stir in the design community. The .Net site published an admirably balanced round-up of comments from designers pointing out where, in their opinions, I had got things wrong. One (Daniel Howells) said that I clearly “had no exposure to the many wonderful sites that leverage super-nimble, lean code that employ almost zero images” and that I was “missing the link between minimalism and beautiful designed interfaces.” Designer and writer Daniel Gray thought that my argument was undermined “by taking a shotgun approach to the web and then highlighting a single favoured alternative, as if the ‘underdesigned’ approach of Peter Norvig is relevant to any of the other sites he discusses”.

There were several more comments in that vein, all reasonable and reasoned — a nice demonstration of online discussion at its best. Reflecting on them brought up several thoughts:

Thanks to Seb Schmoller for the .Net link.

Want to pay lower interest rates on bonds? Get connected.

Sitting next to me at last week’s lecture by Simon Hampton of Google was David Cleevely who in addition to being a successful entrepreneur is also the Founding Director of the Cambridge Centre for Science and Policy. At one point, Simon put up a slide showing the percentage of GDP contributed by the Internet in a wide range of European countries. As the animation flashed by David whispered to me: “wonder if there’s a correlation between those percentages and bond yields?” After the lecture, he cycled off purposefully into the gathering gloom. I suspected that he was Up To Something.

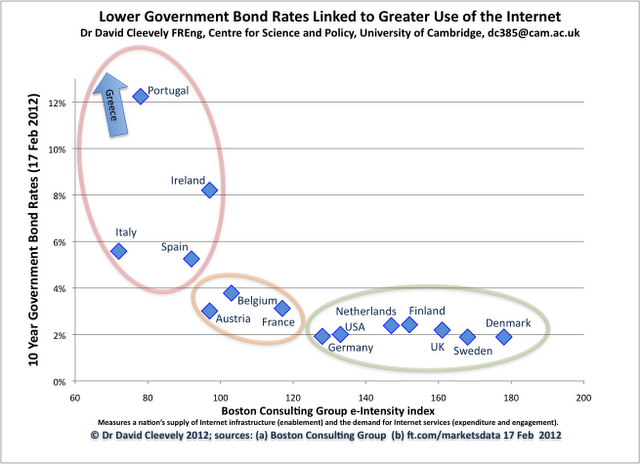

He was. Yesterday he and his son Matthew (who’s currently doing a PhD at Imperial College Business School) published this chart on their blog.

They plotted 10-year governmental bond rates against the Boston Consulting Group’s measure of “e-intensity” for countries in the Eurozone group.

Member states which have not had the capacity to adopt and develop the internet and ecommerce are those with skyrocketing risk premiums on their governments’ debt. These states form a distinct group: Portugal, Italy, Spain, Ireland and Greece (which is literally off the scale) – all with high debt premiums and an e-Intensity score of well under 100.

There are two other groups: those with high e-Intensity and low government bond rates such as Germany, Denmark and the UK, and a middle group (Belgium, Austria and France) with lower e-Intensity (100-120) and raised bond rates of 3-4%.

David and Matthew see two possible (and possibly non-exclusive) explanations for this:

1. Low e-Intensity indicates underlying structural problems: countries with high e-Intensity are those which have invested in modern processes, improved productivity and benefit from strong institutions. These are the countries that have lower borrowing costs, as they are best placed to grow their economies in the future.

and/or

2. e-Intensity (or what it represents) is a fundamental capability: countries which use the internet intensively can respond more flexibly to shocks and crises, instead of being weighed down by cumbersome 20th century processes and institutions.

So…

if you can get a country to invest in, use, and compete on the internet, then you must have either eliminated or minimised any underlying structural problems, or created a flexible and robust economy, or both.

Policymakers, please note.

Winter Sale

From web pages to bloatware

This morning’s Observer column.

In the beginning, webpages were simple pages of text marked up with some tags that would enable a browser to display them correctly. But that meant that the browser, not the designer, controlled how a page would look to the user, and there’s nothing that infuriates designers more than having someone (or something) determine the appearance of their work. So they embarked on a long, vigorous and ultimately successful campaign to exert the same kind of detailed control over the appearance of webpages as they did on their print counterparts – right down to the last pixel.

This had several consequences. Webpages began to look more attractive and, in some cases, became more user-friendly. They had pictures, video components, animations and colourful type in attractive fonts, and were easier on the eye than the staid, unimaginative pages of the early web. They began to resemble, in fact, pages in print magazines. And in order to make this possible, webpages ceased to be static text-objects fetched from a file store; instead, the server assembled each page on the fly, collecting its various graphic and other components from their various locations, and dispatching the whole caboodle in a stream to your browser, which then assembled them for your delectation.

All of which was nice and dandy. But there was a downside: webpages began to put on weight. Over the last decade, the size of web pages (measured in kilobytes) has more than septupled. From 2003 to 2011, the average web page grew from 93.7kB to over 679kB.
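As a quick sanity check on "septupled", here is a small sketch that works out the growth factor from the two figures quoted above. The implied annual rate assumes smooth compound growth across the eight years, which is my simplification and not something the underlying data claims.

```python
# Growth in average web page size, using the two figures quoted in the column.
START_YEAR, END_YEAR = 2003, 2011
START_KB, END_KB = 93.7, 679.0   # "from 93.7kB to over 679kB"

# Overall multiple between the two measurements.
growth_factor = END_KB / START_KB

# Implied average yearly growth, assuming steady compounding (an assumption).
years = END_YEAR - START_YEAR
annual_growth = growth_factor ** (1 / years) - 1

print(f"Growth factor: {growth_factor:.2f}x over {years} years")
print(f"Implied average annual growth: {annual_growth:.1%}")
```

Since the 2011 figure is given as "over 679kB", the factor this prints is a lower bound.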

Quite a few good comments disagreeing with me. In the piece I mention how much I like Peter Norvig’s home page. Other favourite pages of mine include Aaron Sloman’s, Ross Anderson’s and Jon Crowcroft’s. In each case, what I like is the high signal-to-noise ratio.

How things change…

From Quentin’s blog.

There’s a piece in Business Insider based on an interesting fact first noted by MG Siegler. It’s this:

Apple’s iPhone business is bigger than Microsoft

Note, not Microsoft’s phone business. Not Windows. Not Office. But Microsoft’s entire business. Gosh.

As the article puts it:

The iPhone did not exist five years ago. And now it’s bigger than a company that, 15 years ago, was dragged into court and threatened with forcible break-up because it had amassed an unassailable and unthinkably profitable monopoly.

Wow! It seems only yesterday when Microsoft was the Evil Empire.

Regulating the Net

This evening I went to an interesting lecture on “The challenges of regulating the Internet” given by Simon Hampton, who is Google’s Director of Public Policy for Northern Europe. He was, he insisted, speaking only “in a personal capacity” and the audience, for the most part, took him at his word. His thesis was that in the technology business the greatest challenge is “the transition from scarcity to abundance” and that policy-makers haven’t taken this transition on board.

The metaphor he used to communicate abundance was the hoary story of the guy who invents the game of chess and who, when asked by the Emperor what he would like as a reward, requests one grain of rice on the first square, two on the second, four on the third, etc. You know the story: according to Hampton, by the time you get to the 64th square the amount of rice needed is the size of Everest. At which point my neighbour, David Cleevely, nudged me and said that he had worked it out and it was enough rice to cover the entire surface of the earth to a depth of nine inches! But it turned out that, for Hampton, the metaphor also has a chronological dimension: we’re just about half-way through the chessboard.
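The doubling in the story is easy to sketch. The snippet below only counts grains; it makes no attempt to check the Everest or nine-inches claims, which would need assumptions about the size of a grain of rice that the lecture did not supply. The function name is mine, purely for illustration.

```python
# Rice on the chessboard: one grain on square 1, doubling on each later square.
SQUARES = 64
HALF = SQUARES // 2


def grains_on_square(n: int) -> int:
    """Grains on square n (1-indexed) when the count doubles every square."""
    return 2 ** (n - 1)


# Totals for the whole board and for each half of it.
total_grains = sum(grains_on_square(n) for n in range(1, SQUARES + 1))
first_half = sum(grains_on_square(n) for n in range(1, HALF + 1))
second_half = total_grains - first_half

print(f"Grains on square 64:  {grains_on_square(SQUARES):,}")
print(f"Total on the board:   {total_grains:,}")
print(f"First 32 squares:     {first_half:,}")
print(f"Last 32 squares:      {second_half:,}")
```

The first half of the board holds only about four billion grains out of a total of roughly eighteen billion billion, which is presumably why being "half-way through the chessboard" matters so much to the argument.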

As befits someone who spends a lot of time in Brussels arguing with the anti-trust division of the European Commission, he has a pretty jaundiced view about what goes on there. But he made a good point, namely that the EU finds it easiest to regulate in new areas simply because regulating in existing areas means dealing with the reluctance of member states to change their existing laws. The result is that the Commission tends to move too quickly to legislation (in the form of Directives) in emerging areas. Examples: the e-Money Directive; the e-Signature Directive; and, now, the Privacy Directive. Plus, of course, the idiocy of applying to the Net concepts designed for regulating TV broadcasters.

He then went on to list the four big companies that have “embraced abundance” — Apple, Amazon, Google and Facebook. They all operate on massive scale and have an interest in a bigger, freer Internet — though they have only recently woken up to the necessity of lobbying for this in the corridors of power (which is why the campaign against SOPA was so significant). In this context, Hampton claimed, size really matters — which is why moving into the second half of the chessboard is so significant. “The larger the haystack, the easier it is to find the needle.” (Not sure I followed his logic here.)

He was interesting on the subject of lobbying, which of course is a large part of his job. He defined it as trying to persuade legislators and policy-makers that their concept of the public interest should be realigned with that of industrial lobbies. People in declining industries tend to scream, and thereby gain the attention of the public, the media and legislators; in contrast, people in new, growing industries are too busy getting on with it, which probably explains why the big Internet companies were so slow to get their lobbying act together in Washington.

Finally, Hampton moved to the question of how to rethink policy-making in the context of abundance. In his opinion, there’s no need to change the broad objectives of public policy. The key switch that is needed is for policy-makers to “embrace abundance”.

What would that mean in practice? He suggested three general principles:

In the Q&A the main topics were (a) the cluelessness of legislators in relation to the Internet, and (b) concerns about privacy (hardly surprising given this). I made the point that legislators have always been clueless, so there’s nothing new here. (I quoted Bertrand Russell’s argument about why an MP couldn’t be more stupid than his constituents — “because the more stupid he is, the more stupid they are to elect him”.) The point is that in a representative democracy, Members of Parliament should not be expected to be knowledgeable about everything. It’s Parliament, as an institution, that needs to be knowledgeable — which is why we need evidence-based policy-making in relation to Internet regulation, intellectual property, etc. And, to date, we’ve had very little of that.

So the battle for an open Internet continues.

Form vs function in journalism

Just watched, despairingly, Newsnight on BBC2 grappling with the “death of the newspaper”. The peg for this feeble item was the arrival of the Digger at Luton airport. He has, it seems, flown the Atlantic in order to reassure his serfs and placemen at the Sun (a newspaper) that he is not going to close it down.** The Newsnight item followed the usual recipe: a short film report followed by a studio ‘discussion’ with three guests: a former tabloid editor, a doughty female hack (Joan Smith) and a young gel in impossibly high heels who is the UK head of the Huffington Post, a parasitic online creation that feeds on proper journalism.

The really annoying thing about the discussion was the way it failed to distinguish between format and function. The thing we need to preserve is not the newspaper (a form which was the product of a technological accident and a particular set of historical circumstances) but the function (provision of free, independent and responsible journalism). Once upon a time, publishing in print was the only way to ensure that the product of the function reached a public audience. But those days may be ending. The organisations traditionally known as “newspapers” need to transform themselves into journalistic outfits that produce a range of outputs, one of which — but only one of which — may be a printed paper.

The other thing that those who run newspapers need to realise is that digital technology implies businesses that earn much lower margins than analogue businesses did. In the old days, newspapers were often licences to print money. (The Digger’s Sun still is.) But in every industry where digital technology has taken hold, margins have shrunk. Could you support a newsroom of 120 journalists — plus all the material and distribution expenses that go with producing a print product — on the revenues that a newspaper website currently earns? Answer: no. But could you support a newsroom with 80 journalists and a purely online offering with the same revenues? Answer: possibly — provided you were prepared to settle for a modest return (say 5%) on investment.

**Later: Murdoch announced that he would be setting up a Sunday Sun — a continuation of the Screws of the World by other means. This was hailed by the mainstream media as a bold, defiant and possibly inspired tactic. I’m not so sure: it’s just possible that the Murdoch brand is now so toxic that the new gamble won’t wash. An alternative reading is that the old guy is finally losing his marbles. If the US laws against corrupt payments are triggered by the most recent developments (the arrest of Sun journalists on suspicion of making such payments to public officials) then the supine directors and shareholders of News International may finally be moved to, er, move.

iMicroscope

The product ecosystem that surrounds Apple iDevices reminds me of the Peace of God as described in the Bible, in that “it passeth all understanding”. This is an add-on for the iPhone 4 (and 4S) that turns it into a microscope.

Well, sort-of.

The only thing I could find to test it when it arrived was a penny. This is how it came out.

Somehow, I don’t think it’ll make me into a latter-day Leeuwenhoek. But it’s fun.