Many thanks to the readers who pointed out that in the previous two editions I appeared to be confused about the month we’re now in!

Next?

Quote of the Day

“If economists wished to study the horse, they wouldn’t go and look at horses. They’d sit in their studies and say to themselves, ‘What would I do if I were a horse?’”

- Ely Devons

Musical alternative to the morning’s radio news

Handel | Concerto grosso B flat major op. 6 No. 7 HWV 325 | WDR Symphony Orchestra

Long Read of the Day

Philip K. Dick and the Fake Humans

Lovely essay in the Boston Review by Henry Farrell, arguing that we live in Philip K. Dick’s future, not George Orwell’s or Aldous Huxley’s.

This is not the dystopia we were promised. We are not learning to love Big Brother, who lives, if he lives at all, on a cluster of server farms, cooled by environmentally friendly technologies. Nor have we been lulled by Soma and subliminal brain programming into a hazy acquiescence to pervasive social hierarchies.

Dystopias tend toward fantasies of absolute control, in which the system sees all, knows all, and controls all. And our world is indeed one of ubiquitous surveillance. Phones and household devices produce trails of data, like particles in a cloud chamber, indicating our wants and behaviors to companies such as Facebook, Amazon, and Google. Yet the information thus produced is imperfect and classified by machine-learning algorithms that themselves make mistakes. The efforts of these businesses to manipulate our wants leads to further complexity. It is becoming ever harder for companies to distinguish the behavior which they want to analyze from their own and others’ manipulations.

This does not look like totalitarianism unless you squint very hard indeed. As the sociologist Kieran Healy has suggested, sweeping political critiques of new technology often bear a strong family resemblance to the arguments of Silicon Valley boosters. Both assume that the technology works as advertised, which is not necessarily true at all.

Standard utopias and standard dystopias are each perfect after their own particular fashion. We live somewhere queasier—a world in which technology is developing in ways that make it increasingly hard to distinguish human beings from artificial things….

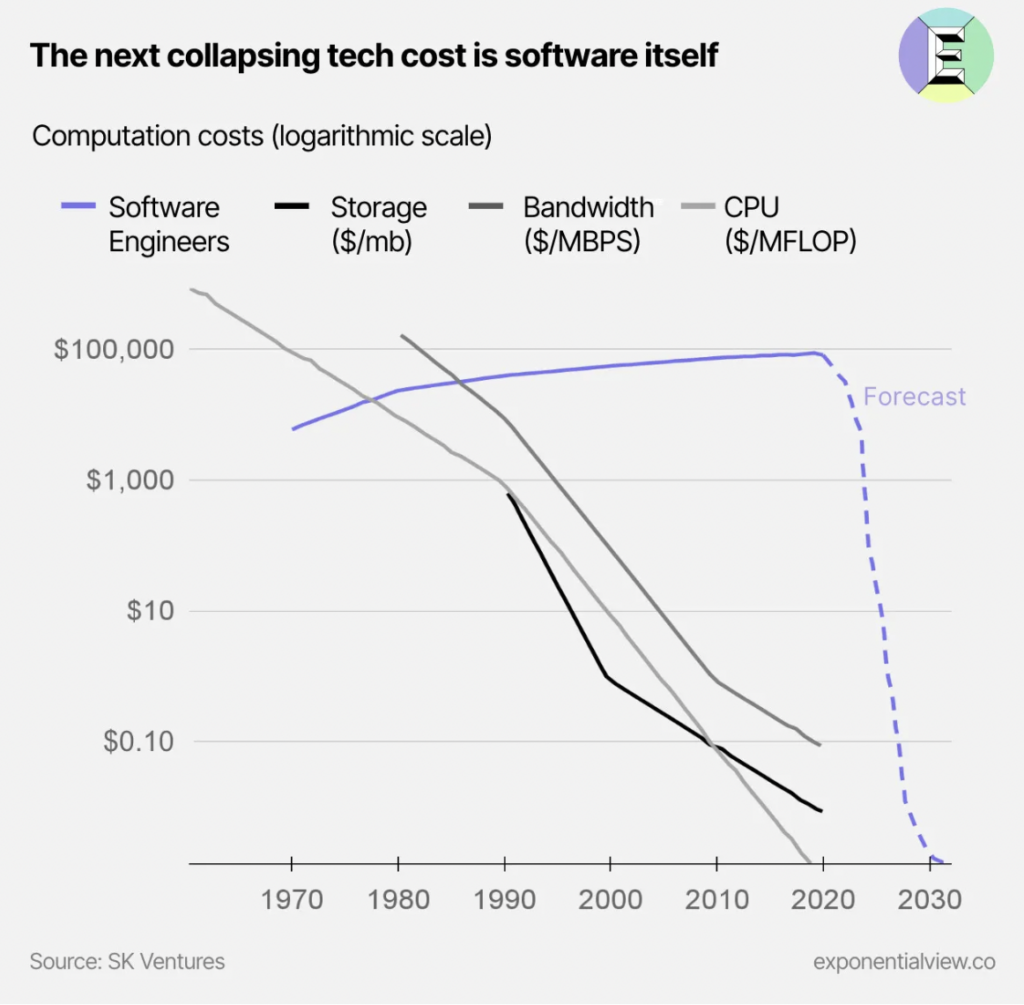

Chart of the Day

Note, though, that there’s no graph for the compute cost, which is a proxy for the carbon footprint of all this data processing.

Orwellian metaphors in ‘Generative AI’

Long, long ago, in his essay “Politics and the English Language”, George Orwell drew attention to the way language is used to conceal awkward truths (“designed to make lies sound truthful and murder respectable, and to give an appearance of solidity to pure wind”). The tech industry (and its media and academic accomplices) is singularly adept at this. Thus machine-learning suddenly became “AI” even though it has nothing to do with intelligence, artificial or otherwise. Clothed in an acronym which originally denoted a serious quest for machines that could display signs of genuine intelligence, the crude, planet-heating, IP-infringing technology called machine-learning can somehow be made respectable.

Now, as Rachel Metz points out in a splendid blast in Bloomberg’s Tech Daily newsletter, the idea that a Large Language Model like ChatGPT or GPT-4 can “hallucinate” has become the default explanation anytime the machine messes up.

We humans can at times hallucinate: We may see, hear, feel, smell or taste things that aren’t truly there. It can happen for all sorts of reasons (illness, exhaustion, drugs).

But…

Companies across the industry have applied this concept to the new batch of extremely powerful but still flawed chatbots. Hallucination is listed as a limitation on the product page for OpenAI’s latest AI model, GPT-4. Google, which opened access to its Bard chatbot in March, reportedly brought up AI’s propensity to hallucinate in a recent interview.

“Hallucinates” is a way of obscuring what’s going on. It’s also a way of encouraging humans to anthropomorphise LLMs.

Saying that a language model is hallucinating makes it sound as if it has a mind of its own that sometimes derails, said Giada Pistilli, principal ethicist at Hugging Face, which makes and hosts AI models.

“Language models do not dream, they do not hallucinate, they do not do psychedelics,” she wrote in an email. “It is also interesting to note that the word ‘hallucination’ hides something almost mystical, like mirages in the desert, and does not necessarily have a negative meaning as ‘mistake’ might.”

Great piece. Hats off to Ms Metz.
