One of our cats. I had been patiently explaining to her — for the umpteenth time — that she was not allowed to sit on this cushion. Her reaction confirms that she knew of PG Wodehouse’s explanation of the superiority complex that all cats manifest: they know that the ancient Egyptians worshipped them as gods. Either that or she’d been browsing “Dogs have owners; cats have staff”.

Monthly Archives: April 2019

Lessons of history

From a remarkable essay about Leonardo da Vinci by historian Ian Goldin[1] in this weekend’s Financial Times, sadly behind a paywall:

“The third and most vital lesson of the Renaissance is that when things change more quickly, people get left behind more quickly. The Renaissance ended because the first era of global commerce and information revolution led to widening uncertainty and anxiety. The printing revolution provided populists with the means to challenge old authorities and channel the discontent that arose from the highly uneven distribution of the gains and losses from newly globalising commerce and accelerating technological change.

The Renaissance teaches us that progress cannot be taken for granted. The faster things change, the greater the risk of people being left behind. And the greater their anger.

Sound familiar? And then…

Renaissance Florence was famously liberal-minded until a loud demagogue filled in the majority’s silence with rage and bombast. The firebrand preacher Girolamo Savonarola tapped into the fear that citizens felt about the pace of change and growing inequality, as well as the widespread anger toward the rampant corruption of the elite. Seizing on the new capacity for cheap print, he pioneered the political pamphlet, offering his followers the prospect of an afterlife in heaven while their opponents were condemned to hell. His mobilisation of indignation — combined with straightforward thuggery — deposed the Medicis, following which he launched a campaign of public purification, symbolised by the burning of books, cosmetics, jewellery, musical instruments and art, culminating in the 1497 Bonfire of the Vanities”.

Now of course history doesn’t really repeat itself. Still… some of this seems eerily familiar.

-

[1] Also co-author of *The Age of Discovery: Navigating the Risks and Rewards of Our New Renaissance*.

StreetView leads us down some unexpected pathways

This morning’s Observer column:

Street View was a product of Google’s conviction that it is easier to ask for forgiveness than for permission, an assumption apparently confirmed by the fact that most jurisdictions seemed to accept the photographic coup as a fait accompli. There was pushback in a few European countries, notably Germany and Austria, with citizens demanding that their properties be blurred out; there was also a row in 2010 when it was revealed that Google had for a time collected and stored data from unencrypted domestic wifi routers. But broadly speaking, the company got away with its coup.

Most of the pushback came from people worried about privacy. They objected to images showing men leaving strip clubs, for example, protesters at an abortion clinic, sunbathers in bikinis and people engaging in, er, private activities in their own backyards. Some countries were bothered by the height of the cameras – in Japan and Switzerland, for example, Google had to lower their height so they couldn’t peer over fences and hedges.

These concerns were what one might call first-order ones, ie worries triggered by obvious dangers of a new technology. But with digital technology, the really transformative effects may be third- or fourth-order ones. So, for example, the internet leads to the web, which leads to the smartphone, which is what enabled Uber. And in that sense, the question with Street View from the beginning was: what will it lead to – eventually?

One possible answer emerged last week…

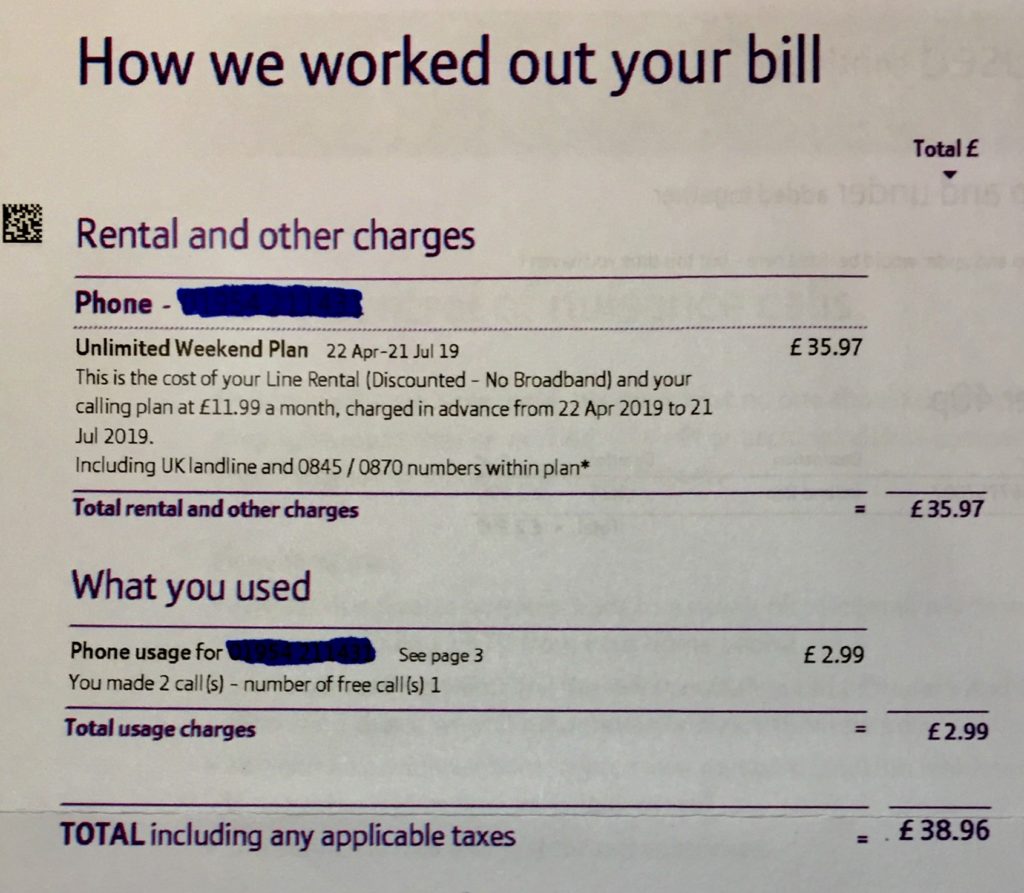

Do I really need a landline?

Quote of the Day

“The problem will never be solved, if solving it means getting rid of all the bad stuff, because we can’t agree on what the bad stuff is. Knowing that things won’t be perfect, what do we feel is most desirable? A system that errs on the side of caution, or one that errs on the side of being permissive?”

Rasmus Nielsen, Reuters Institute, Oxford.

So what happened to “don’t be evil”? Do you have to ask?

From Wired:

Two employee activists at Google say they have been retaliated against for helping to organize a walkout among thousands of Google workers in November, and are planning a “town hall” meeting on Friday for others to discuss alleged instances of retaliation.

In a message posted to many internal Google mailing lists Monday, Meredith Whittaker, who leads Google’s Open Research, said that after the company disbanded its external AI ethics council on April 4, she was told that her role would be “changed dramatically.” Whittaker said she was told that, in order to stay at the company, she would have to “abandon” her work on AI ethics and her role at AI Now Institute, a research center she co-founded at New York University.

Claire Stapleton, another walkout organizer and a 12-year veteran of the company, said in the email that two months after the protest she was told she would be demoted from her role as marketing manager at YouTube and lose half her reports. After escalating the issue to human resources, she said she faced further retaliation. “My manager started ignoring me, my work was given to other people, and I was told to go on medical leave, even though I’m not sick,” Stapleton wrote. After she hired a lawyer, the company conducted an investigation and seemed to reverse her demotion. “While my work has been restored, the environment remains hostile and I consider quitting nearly every day,” she wrote.

The only thing that’s surprising about this is that anybody should be surprised. Google is a corporation, and therefore a sociopathic entity that does only what’s in its interests. And having a senior AI researcher co-found an independent institute that is doing good work interrogating the ethical basis of AI is definitely NOT in the company’s interests.

Footnote: Famously, Google’s unofficial motto was “don’t be evil.” But that’s over, according to the code of conduct that the company distributes to its employees. According to Gizmodo, archives hosted by the Wayback Machine show that the phrase was removed sometime in late April or early May 2018.

Paris in motion

Paris in Motion 1 from Mayeul Akpovi on Vimeo.

Easter Eggs

Toxic tech?

This morning’s Observer column:

The headline above an essay in a magazine published by the Association for Computing Machinery (ACM) caught my eye. “Facial recognition is the plutonium of AI”, it said. Since plutonium – a by-product of uranium-based nuclear power generation – is one of the most toxic materials known to humankind, this seemed like an alarmist metaphor, so I settled down to read.

The article, by a Microsoft researcher, Luke Stark, argues that facial-recognition technology – one of the current obsessions of the tech industry – is potentially so toxic for the health of human society that it should be treated like plutonium and restricted accordingly. You could spend a lot of time in Silicon Valley before you heard sentiments like these about a technology that enables computers to recognise faces in a photograph or from a camera…

Quote of the day

“The age of party democracy has passed. Although the parties themselves remain, they have become so disconnected from the wider society, and pursue a form of competition that is so lacking in meaning, that they no longer seem capable of sustaining democracy in its present form.”

Peter Mair, Ruling the Void