The five members of the BBC Technology team have each made a list.

Nothing really striking, I’m afraid — just the usual suspects: the iPhone and Facebook.

An interesting angle on why the BBC is having technical difficulties, written by someone who seems to be a former BBC engineer.

The problem is that the BBC doesn’t control its own technical infrastructure. In an act of staggering short-sightedness it was outsourced to Siemens as part of a much wider divesting of the BBC Technology unit. In typical fashion for the BBC, they managed to select a technology supplier without internet operations experience. We can only assume that this must have seemed like an acceptable risk to the towering intellects running the BBC at the time. Certainly the staff at ground level knew what this meant, and resigned en masse.

Several years later this puts the BBC in the unenviable situation of having an incumbent technology supplier which takes a least-possible-effort approach to running the BBC’s internet services. In my time at the BBC, critical operational tasks were known to take days or even weeks despite a contractual service level promising four hour response times. Actual code changes for deploying new applications were known to take months. An upgrade to provide less than a dozen Linux boxes for additional server capacity – a project that was over a year old when I joined the BBC – was still being debated by Siemens when I left, eighteen months later.

The BBC’s infrastructure is shockingly outdated, having changed only by fractions over the past decade. Over-priced Sun Enterprise servers running Solaris and Apache provide the front-end layer. This is round-robin load balanced, there’s no management of session state, no load-based connection pool. The front-end servers proxy to the application layer, which is a handful of Solaris machines running Perl 5.6 – a language that was superseded with the release of Perl 5.8 over five and a half years ago. Part of the reason for this is the bizarre insistence that any native modules or anything that can call code of any kind must be removed from the standard libraries and replaced with a neutered version of that library by a Siemens engineer.

Yes, that’s right, Siemens forks Perl to remove features that their engineers don’t like.

This means that developers working at the BBC might not be able to code against documented features or interfaces because Siemens can, at their sole discretion, remove or change code in the standard libraries of the sole programming language in use. It also means that patches to the language, and widely available modules from CPAN may be several major versions out of date – if they are available at all. The recent deployment of Template Toolkit to the BBC servers is one such example – Siemens took years and objected to this constantly, and when finally they assented to provide the single most popular template language for Perl, they removed all code execution functions from the language…

There are some interesting comments on the post.

Ed Felten has been musing about John McCain’s recent remark that the minor issues he might delegate to a vice-president include “information technology, which is the future of this nation’s economy.”

“If information technology really is so important”, asks Ed, “then why doesn’t it register as a larger blip on the national political radar?”

One reason, he thinks, is that

many of the most important tech policy questions turn on factual, rather than normative, grounds. There is surprisingly wide and surprisingly persistent reluctance to acknowledge, for example, how insecure voting machines actually are, but few would argue with the claim that extremely insecure voting machines ought not to be used in elections.

On net neutrality, to take another case, those who favor intervention tend to think that a bad outcome (with network balkanization and a drag on innovators) will occur under a laissez-faire regime. Those who oppose intervention see a different but similarly negative set of consequences occurring if regulators do intervene. The debate at its most basic level isn’t about the goodness or badness of various possible outcomes, but is instead about the relative probabilities that those outcomes will happen. And assessing those probabilities is, at least arguably, a task best entrusted to experts rather than to the citizenry at large.

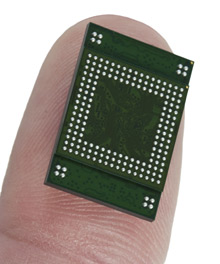

Intel photograph.

From Technology Review…

Since it found its way out of the lab in the late 1990s, flash memory has revolutionized consumer electronics. Because flash-memory chips are smaller, more rugged, and more energy efficient than magnetic hard disks, they have been the ideal replacement for hard drives in handheld devices such as MP3 players, and even in some high-end laptops. Flash is a solid-state memory technology, which means that it has no moving parts and stores data using silicon transistors like those found in microprocessor chips. Because it uses microprocessor technology, it also roughly follows Moore’s Law, the prediction that the number of transistors on a chip doubles about every two years. For processors, this means that they get faster, but for flash-memory chips, it means that data storage doubles. And the market has responded to flash’s burgeoning capacity: in 1999, the flash-memory market was nonexistent, but in 2007, it amounts to $15.2 billion.

At a press event, Don Larson, the marketing manager of Nand products at Intel, showed off the new chip. Called the Z-P140, it’s about the size of a thumbnail and weighs less than a drop of water. It currently comes in two- and four-gigabyte versions, which are available to manufacturers for use in handheld devices. The first products featuring the new chips will be available in January.

Since the new solid-state drive has standard control electronics built in, it can be combined with up to three other Intel chips that don’t have controllers, for a maximum of 16 gigabytes of storage, says Troy Winslow, flash marketing manager at Intel. While that may not seem like a lot compared with the 160-gigabyte hard drives in desktop computers, Larson pointed out that two gigabytes is enough to run some operating systems, such as Linux, along with software applications…

Seems daft to call it a ‘hard drive’ though.

Useful New York Times review of the current state of play.

“For most people,” [Google CEO Eric Schmidt] says, “computers are complex and unreliable,” given to crashing and afflicted with viruses. If Google can deliver computing services over the Web, then “it will be a real improvement in people’s lives,” he says.

To explain, Mr. Schmidt steps up to a white board. He draws a rectangle and rattles off a list of things that can be done in the Web-based cloud, and he notes that this list is expanding as Internet connection speeds become faster and Internet software improves. In a sliver of the rectangle, about 10 percent, he marks off what can’t be done in the cloud, like high-end graphics processing. So, in Google’s thinking, will 90 percent of computing eventually reside in the cloud?

“In our view, yes,” Mr. Schmidt says. “It’s a 90-10 thing.” Inside the cloud resides “almost everything you do in a company, almost everything a knowledge worker does.”

Google is taking aim at Wikipedia…

Google Knol is designed to allow anyone to create a page on any topic, which others can comment on, rate, and contribute to if the primary author allows. The service is in a private test beta. You can’t apply to be part of it, nor can the pubic [sic] see the pages that have been made. Google also stressed to me that what’s shown in the screenshots it provided might change and that the service might not launch at all…

If they do launch it, then the emerging comparisons with Wikipedia will be intriguing. GMSV has a thoughtful take on it.

Now you may be thinking, “Don’t we already have a collaborative, grass-roots, online encyclopedia … Wiki-something?” Indeed we do, as the Google guys are well aware, since Wikipedia entries tend to show up in that coveted area near the top of many, many pages of Google search results. But Google’s plan is based on a model that highlights individual expertise rather than collective knowledge. Unlike Wikipedia, where the contributors and editors remain in the background, each knol represents the view of a single author, who is featured prominently on the page. Readers can add comments, reviews, rankings, and alternative knols on the subject, but cannot directly edit the work of others, as in Wikipedia. And Google is offering another incentive — knol authors can choose to include ads with their offering and collect a cut of the revenue.

Some see this as a dagger in Wikipedia’s heart, but from a user perspective, I think they look more complementary than competitive, both with their weak and strong points. Search a topic on Wikipedia and you’ll get a single page of information, the contents of which could be the result of a lot of backroom back-and-forth, but which, when approached with a reasonable degree of skepticism, offers some quick answers and a good jumping off point to additional research. Search a topic in Google’s book of knowledge and it sounds like you’ll get your choice of competing knols all annotated with the comments of other users, and if there are disagreements or differing interpretations, they’ll be argued out in the open. So it’s the wisdom of crowds as created by readers vs. the wisdom of experts (or whoever is interested enough in glory and revenue to stake that claim) as ranked by readers. I can see the usefulness and drawbacks of both.

Where this does represent a threat to Wikipedia is in traffic, if Google knols start rising to the top of the search results and Wikipedia’s are pushed down. Google says it won’t be giving the knols any special rankings juice to make that happen, but the more Google puts its own hosted content in competition with what it indexes, the more people are going to be suspicious.

All kinds of interesting scenarios present themselves. It’s not just the wisdom of crowds vs. the wisdom of ‘experts’. It’s also the Jeffersonian ‘marketplace in ideas’ on steroids. Just imagine, for example, competing Knols on the Holocaust written by David Irving (and I’m sure he will submit one) vs. one written by Richard Evans or Deborah Lipstadt.

Hmmm… Yet another attempt to bridge the gap between paper and computer?

The Livescribe smartpen revolutionizes the act of writing by recording and linking audio to what you put on paper. Tap on words or drawings in your notes, and the smartpen replays recorded audio from the time you were writing. Transfer notes to your PC to backup, replay, and share them online.

Tom Coates is annoyed with Nick Carr. Here’s his summing up:

I think the thing that annoys me most about your piece here is that it’s the same rhetoric that you always take – that there’s something inherently suspicious about all this weird utopian rhetoric of these mad futurist, self-important technologists – that somehow none of it really applies to the rest of the world, because those people are so detached from reality, and that finally they’re all missing what’s really important.

All of which would be rather more convincing if you weren’t recapitulating what we’ve been saying for the last three years.

While I’m sure it helps promote you in the eyes of people with power and money to be suspicious and critical of new technology and set yourself up to be an impartial arbiter of what’s happening, free of hype and applying real-world values (or however it is you sell this warmed over stuff), I’d argue that you’re ultimately doing yourself a disservice. You just look ill-informed!

Ouch! That’s a bit harsh: Nick Carr is a bit of a contrarian, but sometimes he’s very perceptive (e.g. about the sharecropping metaphor as a way of thinking about MySpace and some user-generated content). But Tom Coates is right about the chunk of the intellectual spectrum to which Carr has staked a claim. The title of his first book — Does IT Matter? — says it all. He’s positioned himself as the ‘grown-up’ commentator.

SenseCam is a wearable digital camera that is designed to take photographs passively, without user intervention, while it is being worn. Unlike a regular digital camera or a cameraphone, SenseCam does not have a viewfinder or a display that can be used to frame photos. Instead, it is fitted with a wide-angle (fish-eye) lens that maximizes its field-of-view. This ensures that nearly everything in the wearer’s view is captured by the camera, which is important because a regular wearable camera would likely produce many uninteresting images.

At first sight this product of research at Microsoft’s Cambridge Lab seems banal, but it turns out to have a really intriguing application. As Tech Review reports:

When Mrs. B was admitted to the hospital in March 2002, her doctors diagnosed limbic encephalitis, a brain infection that left her autobiographical memory in tatters. As a result, she can only recall around 2 percent of events that happened the previous week, and she often forgets who people are. But a simple device called SenseCam, a small digital camera developed by Microsoft Research, in Cambridge, U.K., dramatically improved her memory: she could recall 80 percent of events six weeks after they happened, according to the results of a recent study.

“Not only does SenseCam allow people to recall memories while they are looking at the images, which in itself is wonderful, but after an initial period of consolidation, it appears to lead to long-term retention of memories over many months, without the need to view the images repeatedly,” says Emma Berry, a neuropsychologist who works as a consultant to Microsoft.

The device is worn around the neck and automatically takes a wide-angle, low-resolution photograph every 30 seconds. It contains an accelerometer to stabilize the image and reduce blurriness, and it can be configured to take pictures in response to changes in movement, temperature, or lighting. “Because it has a wide-angle lens, you don’t have to point it at anything–it just happens to capture pretty much everything that the wearer can see,” says Steve Hodges, the manager of the Sensor and Devices Group at Microsoft Research, U.K.

The camera stores VGA-quality images as compressed .jpg files, and can fit 30,000 of them onto a 1GB flash card. The images can then be run as crude movies, which are evidently good enough to jog the memory.
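For the curious, the figures quoted above (one frame every 30 seconds, 30,000 images per 1GB card) imply a rough per-image size and a rough recording time per card. What follows is only a back-of-envelope sketch: it assumes decimal units (1 GB = 10^9 bytes) and timer-only capture, ignoring the extra sensor-triggered shots the article mentions, and the function names are my own.

```python
# Back-of-envelope check of the SenseCam figures quoted in the post.
# Assumptions (not from the article): 1 GB = 10**9 bytes, and the camera
# fires only on its 30-second timer, with no sensor-triggered extras.

CARD_BYTES = 1 * 10**9      # 1GB flash card
IMAGES_PER_CARD = 30_000    # images the card is said to hold
SECONDS_PER_IMAGE = 30      # one photograph every 30 seconds


def average_image_bytes(card_bytes: int, images: int) -> float:
    """Average compressed size of one image if the card is exactly full."""
    return card_bytes / images


def recording_hours(images: int, interval_s: int) -> float:
    """Hours of wear needed to fill the card at a fixed capture interval."""
    return images * interval_s / 3600


avg_kb = average_image_bytes(CARD_BYTES, IMAGES_PER_CARD) / 1000
hours = recording_hours(IMAGES_PER_CARD, SECONDS_PER_IMAGE)

print(f"{avg_kb:.1f} kB per image, {hours:.0f} hours per card")
```

On those assumptions each compressed VGA frame averages about 33 kB, and a single card covers roughly 250 hours of wear, which is several weeks of waking life before anything needs to be offloaded.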

Interesting illustration of the utility of photography.

An essential link for every budding Web 2.0 entrepreneur — a TechBubble-friendly Company Name Generator. I’ve already got ‘Blogblab’ out of it. And — yep you guessed it! — it’s taken. Sigh.