Lovely video made by Liberty about the powers being legitimised in the Investigatory Powers Bill

Category Archives: Privacy

Why the Apple vs. the FBI case is important

This morning’s Observer column:

No problem, thought the Feds: we’ll just get a court order forcing Apple to write a special version of the operating system that will bypass this security provision and then download it to Farook’s phone. They got the order, but Apple refused point-blank to comply – on several grounds: since computer code is speech, the order violated the first amendment because it would be “compelled speech”; because being obliged to write the code amounted to “forced labour”, it would also violate the fifth amendment; and it was too dangerous because it would create a backdoor that could be exploited by hackers and nation states and potentially put a billion users of Apple devices at risk.

The resulting public furore offers a vivid illustration of how attempting a reasoned public debate about encryption is like trying to discuss philosophy using smoke signals. Leaving aside the purely clueless contributions from clowns like Piers Morgan and Donald Trump, and the sanctimonious platitudes from Obama downwards about “no company being above the law”, there is an alarmingly widespread failure to appreciate what is at stake here. We are building a world that is becoming totally dependent on network technology. Since there is no possibility of total security in such a world, then we have to use any tool that offers at least some measure of protection, for both individual citizens and institutions. In that context, strong encryption along the lines of the stuff that Apple and some other companies are building into their products and services is the only game in town.

Going Dark? Dream on.

This morning’s Observer column:

The Apple v FBI standoff continues to generate more heat than light, with both sides putting their case to “the court of public opinion” — which, in this case, is at best premature and at worst daft. Apple has just responded to the court injunction obliging it to help the government unlock the iPhone used by one of the San Bernardino killers with a barrage of legal arguments involving the first and fifth amendments to the US constitution. Because the law in the case is unclear (there seems to be only one recent plausible precedent and that dates from 1977), I can see the argument going all the way to the supreme court. Which is where it properly belongs, because what is at issue is a really big question: how much encryption should private companies (and individuals) be allowed to deploy in a networked world?

In the meantime, we are left with posturing by the two camps, both of which are being selective with the actualité, as Alan Clark might have said…

What the FBI vs Apple contest is really about

Wired nails it

But this isn’t about unlocking a phone; rather, it’s about ordering Apple to create a new software tool to eliminate specific security protections the company built into its phone software to protect customer data. Opponents of the court’s decision say this is no different than the controversial backdoor the FBI has been trying to force Apple and other companies to build into their software—except in this case, it’s an after-market backdoor to be used selectively on phones the government is investigating.

The stakes in the case are high because it draws a target on Apple and other companies embroiled in the ongoing encryption/backdoor debate that has been swirling in Silicon Valley and on Capitol Hill for the last two years. Briefly, the government wants a way to access data on gadgets, even when those devices use secure encryption to keep it private.

Yep. This is a backdoor by another route. It’s also forcing a company to do work for the government that, in this case, the government wants to do but claims it can’t. This will play big in China, Russia, Bahrain, Iran and other places too sinister to mention.

The FBI’s argument that the phone is vital for its investigation seems weak. They already know everything they need to know, and the idea that the San Bernardino killers were serious ISIS stooges seems implausible given the prevalence of mass shootings in the US, and the way they conformed to type. What’s more likely is that the agency is playing politics. They’ve been arguing for yonks that they simply must have back doors. The San Bernardino killers presented them with a heaven-sent opportunity to leverage public outrage to force a tech company into conceding the backdoor principle.

Ad nauseam

Now here’s an interesting idea — a browser plug-in that silently clicks on every ad that appears on a web-page, thereby swamping — and confusing — the trackers, who have to make sense of what they’re getting back.

Surveillance, long-term effects of

Interesting post by a former Federal agent:

We all have a “private self” and a “public self.” It’s no secret that we all act and communicate differently when we are alone or in a setting with people we trust. In a free country, the decision to transition from that private self to the public self is largely within the control of the individual. When a free man or woman is home spending time with family he or she inhabits the private self. Typically one transitions to a public self when they grab the car keys and open the front door to head to work. There are things you may do or say while you were acting as that private self that you will no longer do or say at work, in your car, in an email, or on a business conference call.

Now, imagine living in a place where there is no distinction between the private self and the public self. Imagine a place where only the government has the key that unlocks the door between the private self and the public self…

Yahoo: turn off your ad-blockers or lose your email service

Well, well. The rise of ad-blocking is beginning to bite.

On Friday, dozens of people took to web forums and social media to complain that they were blocked from their Yahoo email accounts unless they switched off their ad blockers.

The issue seems to have first appeared early on Thursday when “portnoyd,” a user on the AdBlock Plus online support forum, was served a pop-up with an ultimatum: Turn off your ad blocker, or forget about getting to your email.

Yahoo confirmed the reports, which were discovered by Digiday. Yahoo, based in Sunnyvale, Calif., did not say how many users were affected.

“At Yahoo, we are continually developing and testing new product experiences,” Anne Yeh, a Yahoo spokeswoman, said in a statement. “This is a test we’re running for a small number of Yahoo Mail users in the U.S.”

Don’t you just love that guff about “developing and testing new product experiences”!

In the end, the targeted-ad-based business model is not sustainable. Wonder what will replace it.

Let’s turn the TalkTalk hacking scandal into a crisis

Yesterday’s Observer column:

The political theorist David Runciman draws a useful distinction between scandals and crises. Scandals happen all the time in society; they create a good deal of noise and heat, but in the end nothing much happens. Things go back to normal. Crises, on the other hand, do eventually lead to structural change, and in that sense play an important role in democracies.

So a good question to ask whenever something bad happens is whether it heralds a scandal or a crisis. When the phone-hacking story eventually broke, for example, many people (me included) thought that it represented a crisis. Now, several years – and a judicial enquiry – later, nothing much seems to have changed. Sure, there was a lot of sound and fury, but it signified little. The tabloids are still doing their disgraceful thing, and Rebekah Brooks is back in the saddle. So it was just a scandal, after all.

When the TalkTalk hacking story broke and I heard the company’s chief executive say in a live radio interview that she couldn’t say whether the customer data that had allegedly been stolen had been stored in encrypted form, the Runciman question sprang immediately to mind. That the boss of a communications firm should be so ignorant about something so central to her business certainly sounded like a scandal…

LATER Interesting blog post by Bruce Schneier. He opens with an account of how the CIA’s Director and the software developer Grant Blakeman had their email accounts hacked. Then,

Neither of them should have been put through this. None of us should have to worry about this.

The problem is a system that makes this possible, and companies that don’t care because they don’t suffer the losses. It’s a classic market failure, and government intervention is how we have to fix the problem.

It’s only when the costs of insecurity exceed the costs of doing it right that companies will invest properly in our security. Companies need to be responsible for the personal information they store about us. They need to secure it better, and they need to suffer penalties if they improperly release it. This means regulatory security standards.

The government should not mandate how a company secures our data; that will move the responsibility to the government and stifle innovation. Instead, government should establish minimum standards for results, and let the market figure out how to do it most effectively. It should allow individuals whose information has been exposed to sue for damages. This is a model that has worked in all other aspects of public safety, and it needs to be applied here as well.

He’s right. Only when the costs of insecurity exceed the costs of doing it right will companies invest properly in it. And governments can fix that, quickly, by changing the law. For once, this is something that’s not difficult to do, even in a democracy.

The end of private reading is nigh

This morning’s Observer column about the Investigatory Powers bill:

The draft bill proposes that henceforth everyone’s clickstream – the URLs of every website one visits – is to be collected and stored for 12 months and may be inspected by agents of the state under certain arrangements. But collecting the stream will be done without any warrant. To civil libertarians who are upset by this new power, the government’s response boils down to this: “Don’t worry, because we’re just collecting the part of the URL that specifies the web server and that’s just ‘communications data’ (aka metadata); we’re not reading the content of the pages you visit, except under due authorisation.”

This is the purest cant, for two reasons…

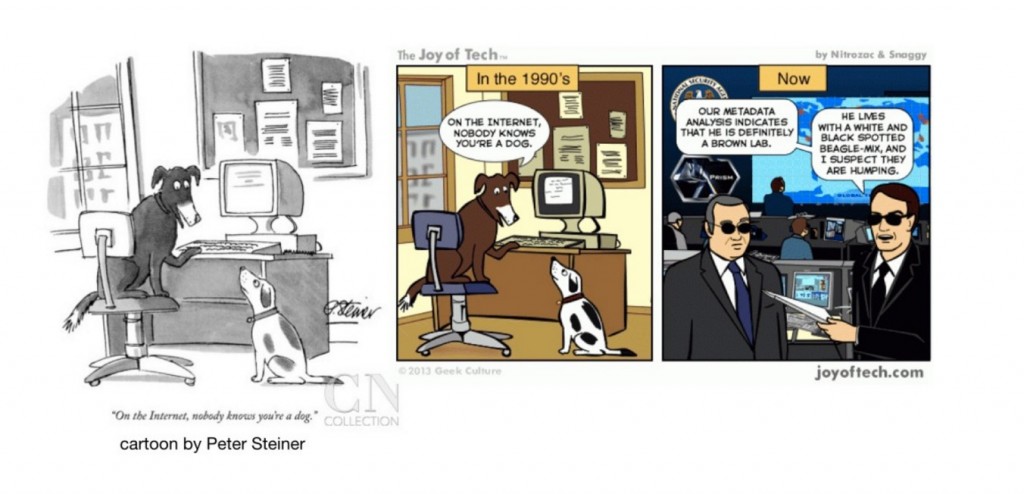

Anonymity on the Net — updated

Nice updating of the classic 1993 New Yorker cartoon to take in the Snowden era.

From a fascinating talk by Ethan Zuckerman.