Re-learning to see in B&W

I have an interesting new toy: a digital camera that only does black and white. On the face of it, this seems daft. After all, if you have a camera with a colour sensor you can always desaturate the image in Photoshop or Lightroom and ‘convert’ it to B&W, thereby getting the best of both worlds: colour and monochrome in the same package.

Er, not quite. That post-processed monochrome tends to be a bit, well, muddy.

The reason is that colour sensors have a Bayer filter on them which is what enables them to render images in colour. It was a wonderful invention, but it has some downsides — aliasing and absorbing light, among other things.

But if you don’t need (or want) colour images, you can dispense with the filter (and its downsides). Which is what my new toy does.

The result is that I’m now getting B&W images which are astonishingly vivid. In fact, they’re very like the images I used to get when I first became a serious photographer, processing my own B&W film.

What I’ve realised, though, is that after switching from analogue to digital photography a decade ago, I always worked in colour: all digital cameras were colour-natives, as it were.

But what I have now discovered is that, in that process, I had forgotten how to ‘see’ in B&W.

So I’m on a learning journey, again. Back to the future, you might say.

Quote of the Day

“Happiness, n. An agreeable sensation arising from contemplating the misery of another.”

- Ambrose Bierce, in The Enlarged Devil’s Dictionary.

Musical alternative to the morning’s radio news

Mark Knopfler | Brothers In Arms | Berlin, 2007

Wonderful.

Long Read of the Day

Our new Deep Blue moment

The perennial debate about computing since the year dot has been whether the technology is an augmentation of human capability or a replacement for it. Many contemporary concerns about digital tech tend towards the replacement thesis, and this has recently reached another crescendo in the discussion about LLMs and ‘Generative AI’.

So here’s an essay by Francisco Toro which takes its inspiration from an earlier moment of existential angst — when Garry Kasparov was defeated by the IBM computer Deep Blue.

We’re living a new, much broader Deep Blue moment, when the basic boundary lines between the outer limits of what machines and humans can do are suddenly in flux. Only this time, the people directly concerned aren’t just a few dozen grandmasters in the rarified world of top-level chess. This time, it’s everyone.

All of which left me wondering: can that original Deep Blue moment inform our current vertigo?

Read on. It’s interesting.

ChatGPT, etc.

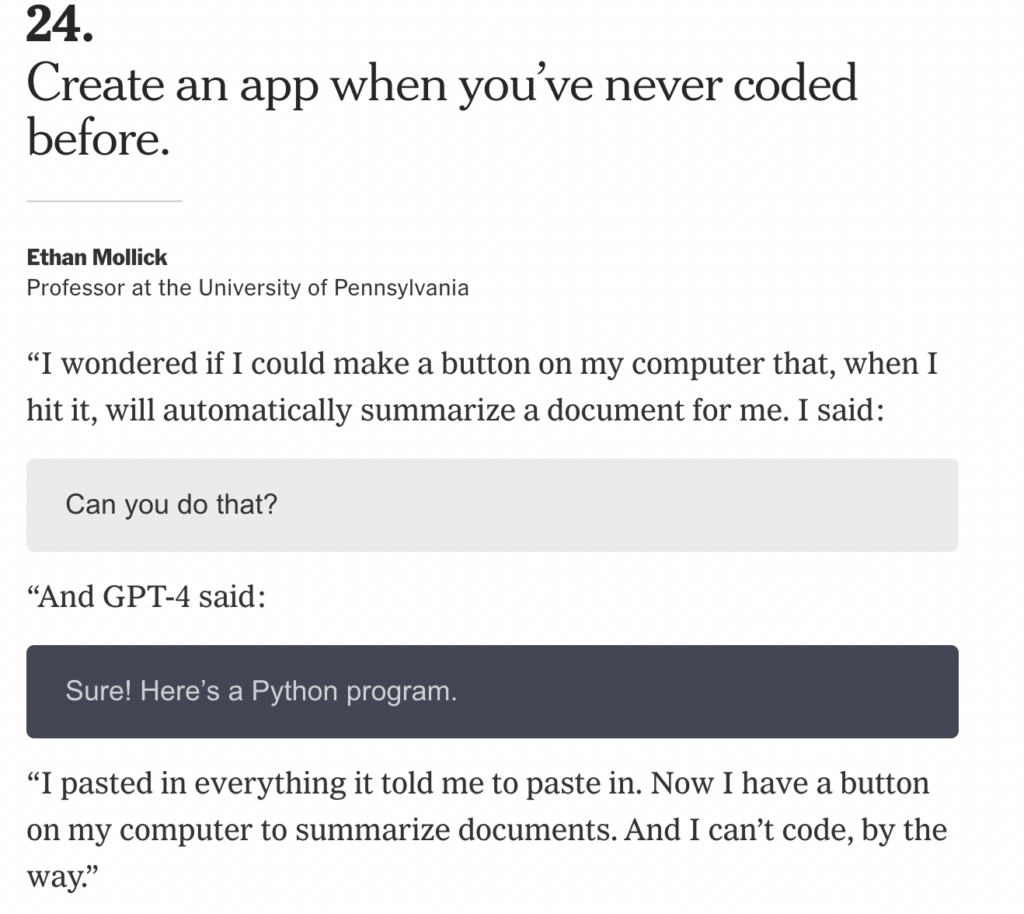

The New York Times has an article listing 35 ways in which people use ChatGPT or other ‘Generative AI’ systems to do useful things for them.

No. 24 is from Ethan Mollick of the University of Pennsylvania who, from the moment that ChatGPT first appeared, has been an enthusiastic advocate for it.

Brad DeLong is not impressed:

“Code without knowing how to code”: ask Chat GPT4 to code something even moderately complex, copy and paste the result into an environment, and run it, and odds are it will not work without at least some debugging. What software copilots allow you to do is to remove the book you had at your left hand that you frantically paged through trying to figure out what the exact format for that is in this computer language, and to avoid the visits to confusing Stack Overflow threads. You still have to “know how” in the sense of knowing how to set up code architecture, know when something is close enough to correct for it to be worthwhile running it to get an error message, and know how to debug. Since nearly all of us are much better at architecture, recognizing that it is close, and debugging than we are at actually writing stuff ex nihilo, it is an enormous productivity amplification for programmers.

But it does not allow people “to code without knowing how to code”, and nobody who actually knows anything about it would say that. And yet they do…

OK. Maybe the problem is that they believed Ethan Mollick when he said “I can’t code” when what he meant was “I have a day job, and can’t code well”? But still…

Readers write…

My memoir the other day about seeing JFK in the flesh moved Anne Kirkman (Whom God Preserve) to write:

I can’t resist commenting on your post about JFK, as I had a very similar experience with Bobby Kennedy. I came out of a shop in the Cornmarket in Oxford to find a small crowd surrounding a car on the roof of which Bobby K. was standing. My memories of him tally with yours of his brother, except there was no speech. The effect of his presence was almost like a physical shock. I’ve never come across such a charismatic person again. How did they do it?

And Trevor Mudge, a computer scientist, writes apropos my choice of John Horgan’s diatribe against sceptics,…

I don’t think John Horgan is correct to refer to Krauss as a “hack physicist” unless one thinks a PhD in theoretical physics from MIT qualifies one as a hack. He may have views that Horgan, a “famous” journalist, does not agree with, but I cannot agree with Horgan’s characterization. The excerpt of Horgan’s that you quote makes use of ad hominem attacks on Krauss and Dawkins, which to my mind detracts strongly from Horgan’s point. As a point of reference, Horgan does not have a great reputation among scientists and mathematicians as far as I can tell: https://www.ams.org/notices/199802/bookrev-hoffman.pdf.

Errata

In yesterday’s edition Rebecca Solnit was wrongly identified as ‘Rebecca Solent’. The culprit was Apple autocorrect, but also me because I failed to spot the mistake before publishing. Apologies to her, and to you, dear reader. And thanks to Mark Liebenrood for pointing it out.
