Things to come

It is, after all, the 1st of March.

Quote of the Day

When you make motion pictures, each picture is a life unto itself. When you finish and the picture is over, there’s an understanding, a realisation that we’ll never be assembled this way again. That these relationships are severed forever and ever. And each of these films is a little life.

- John Huston

I thought of this as the credits rolled at my first viewing of his beautiful film, The Dead. It was his last movie, and he directed it from a wheelchair.

Musical alternative to the morning’s radio news

Erik Satie | Gymnopédie No.1

Long Read of the Day

Developing AI Like Raising Kids

This is a transcript of a remarkable conversation between two remarkable people — Alison Gopnik and Ted Chiang. It’s the most insightful thing I’ve read on the craziness of the current conviction of the AI crowd (Altman & Co) that extrapolation of machine-learning technology will one day get us to human-level intelligence.

Here’s a snatch of the conversation that gives a flavour of the interaction:

Chiang: One of the guiding questions for me when I was writing Lifecycle of Software Objects was “How do you make a person?” At some level, it seems like a simple thing, but the more you think about it, you realize that it is the hardest job in the world. It is maybe the job that requires the most wrestling with difficult ethical questions, but the fact that so many people raise children makes it very easy to devalue it. We tend to congratulate people who have written a novel or something like that, because relatively few people write novels. A lot of people have children! A lot of people raise children to adulthood! And what they have accomplished is something incredible.

Gopnik: Just in terms of the cognitive difficulty level, it’s an amazing accomplishment. One of the things that we’ve been thinking about in the context of the Social Science Group is that the very structure of what it means to raise a person is so different from the structure of almost everything else that we do. So usually what we do is we have some set of goals, we produce a bunch of actions, insofar as our actions lead to our goals, we think that we’ve been successful. Insofar as they don’t, we don’t. But of course, if you’re trying to create a person, the point is that you’re not trying to achieve your goals, you’re trying to give them autonomy and resources that will let them achieve their own goals, and even let them formulate their own goals.

If you are puzzled by the current ‘AI’ madness, do read this transcript.

Having read it, I bought Exhalation, the collection of Ted Chiang’s stories that includes the novella The Lifecycle of Software Objects, which he mentions in the conversation.

I’ve been reading his non-fiction essays on AI for a while (e.g. “Silicon Valley is Turning into its Own Worst Fear” and “Will AI Become the New McKinsey?”). Like Gopnik, he’s one of the most perceptive thinkers about this stuff.

Books, etc.

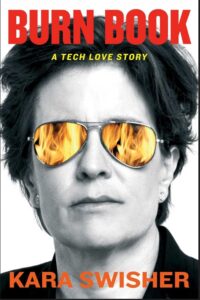

Kara Swisher’s new book

The New York Times’s reviewer is not impressed. Here’s how he sums it up:

Her forthrightness goes some way in helping us believe that “Burn Book” doesn’t merely represent a convenient pivot, as they say, from Tech royalty to Tech heretic at a time when strident industry criticism is trending hard. But “Burn Book”’s fatal flaw, the reason it can never fully dispel the whiff of opportunism that dooms any memoir, is that Swisher never shows in any convincing detail how her entanglement with Silicon Valley clouded her judgment. The story of her change of heart is thus undercut by the self-aggrandizing portrait that rests stubbornly at its core. “At least now we know the problems,” Swisher writes of Silicon Valley at the end of “Burn Book.” Do we?

My commonplace booklet

From John Thornhill in the FT of 3 February:

The tendency of generative artificial intelligence systems to “hallucinate” — or simply make stuff up — can be zany and sometimes scary, as one New Zealand supermarket chain found to its cost. After Pak’nSave released a chatbot last year offering recipe suggestions to thrifty shoppers using leftover ingredients, its Savey Meal-bot recommended one customer make an “aromatic water mix” that would have produced chlorine gas.

Lawyers have also learnt to be wary of the output of generative AI models, given their ability to invent wholly fictitious cases. A recent Stanford University study of the responses generated by three state of the art generative AI models to 200,000 legal queries found hallucinations were “pervasive and disturbing”. When asked specific, verifiable questions about random federal court cases, OpenAI’s ChatGPT 3.5 hallucinated 69 per cent of the time while Meta’s Llama 2 model hit 88 per cent.

This blog is also available as an email three days a week. If you think that might suit you better, why not subscribe? One email on Mondays, Wednesdays and Fridays, delivered to your inbox at 6am UK time. It’s free, and you can always unsubscribe if you conclude your inbox is full enough already!